Meet Your New Boss: How AI Will Replace Clinical Research Jobs by 2028

AI isn’t “coming” for clinical research; it’s already absorbing scheduling, data cleaning, SDTM mapping, site feasibility scoring, and even first-pass medical writing. By 2028, sponsors and CROs will rebalance headcount toward algorithm-supervised workflows, with humans owning escalation, exception handling, and stakeholder trust. This playbook shows which roles face the steepest automation pressure, where augmentation beats replacement, and how to pivot fast with trial-ready skills. Use the linked CRO directories, site maps, sponsor intel, and salary reports to make hard decisions now: reskill, specialize, or cross the value chasm before AI sets your comp bands.

1) The 2028 reality: replacement vs. radical augmentation

“Replacement” won’t look like pink slips overnight. It looks like workload compression, where one coordinator produces the output of three, guided by agentic copilots that ingest EDC logs, wearable streams, and unstructured PDFs. Low-judgment tasks (calendar chasing, duplicate checks, AE verbatim normalization, protocol-amendment diffing) shift to models, while humans still own protocol intent, risk trade-offs, and stakeholder alignment across PI, sponsor, and IRB. To land on the winning side, anchor your growth to three metrics that AI can’t earn without human credibility: decision latency, exception-resolution speed, and cross-functional trust. Build real-world context through the Top-50 CROs worldwide, sponsor pattern recognition from US sponsor insights, and throughput intelligence via APAC site capacity.

Then prove it with hard artifacts: a living deviation-decision log, 48-hour exception SLAs with root-cause notes, and PI feedback scores per site. Productize your value—publish reusable RBM signal playbooks, rationale memos, and context-of-use checklists for digital endpoints. Tighten language and coaching with the shared vocabulary in CRA monitoring terms and quick references from the Top-100 acronyms guide. Cross-train in central monitoring and endpoint validation so your name is attached to fewer errors, faster escalations, and timelines that actually hold.

| Role | Est. % of Tasks Automatable by 2028 | Tasks AI Eats First | Human Moat (Keeps You Employed) | Salary Pressure | Pivot Play (Actionable Move) |
|---|---|---|---|---|---|
| Clinical Research Coordinator (CRC) | 45–60% | Scheduling, visit windows, EDC checks | PI trust, consent nuance, escalation | Medium | Master RBM workflows; lead protocol literacy |
| Clinical Research Associate (CRA) | 40–55% | SDV sampling, deviation detection | Site coaching, investigator alignment | Medium | Move into central monitoring leadership |
| Data Manager | 60–75% | SDTM mapping, edit checks, deduping | Standards governance, curation of gold labels | High | Own data layer + quality KPIs |
| Biostatistician | 35–50% | Derivation code, routine models | Design choices, assumptions under uncertainty | Medium | Lead adaptive design & decision memos |
| Medical Writer | 55–70% | First-pass sections, summaries | Signal triage, regulator-facing narrative | High | Specialize in “hard” sections & responses |
| Safety Scientist / PV | 35–50% | Case triage, duplicate detection | Risk logic, aggregate signal causality | Medium | Own safety signal governance boards |
| Regulatory Ops | 50–65% | Dossier assembly, xRef mgmt | Agency relationships, negotiation | High | Be the “agency whisperer” |
| Site Start-Up (SSU) | 50–60% | Doc collection, template diffing | Local ethics nuance, contracts cadence | Medium | Specialize in fast-turn regions |
| Feasibility Analyst | 65–80% | Site scoring, volume forecasting | Qualitative PI intel, network trust | High | Own PI relationships across regions |
| eCOA/ePRO Lead | 45–55% | Form builds, schedule logic | Instrument validity, bias mitigation | Medium | Guard instrument integrity in DCTs |
| Central Monitor | 35–45% | Outlier surfacing, routine queries | Signal interpretation, site coaching | Low | Design KPI playbooks & escalations |
| Clinical Project Manager | 25–40% | Gantt updates, resource burndown | Stakeholder orchestration, trade-offs | Low | Own portfolio scenario planning |
| Medical Monitor | 20–35% | Case triage suggestions | Causality calls, ethics, liability | Low | Chair safety boards, educate sites |
| Clinical QA/Compliance | 40–55% | Checklist audits, SOP gap flags | Interpretation, quality culture | Medium | Lead AI quality governance |
| Supply/Logistics | 55–70% | Kit planning, cold-chain alerts | Exception routing with sites | High | Design exception playbooks |
| Training/L&D | 45–60% | Generic modules, quizzes | Role-specific coaching, live drills | Medium | Create role-tailored mastery tracks |
| TMF Specialist | 65–80% | Filing, version control | Inspection readiness, gap triage | High | Own inspection simulations |
| Payments/Finance Ops | 60–75% | Visit reconciliation, payouts | Edge cases, vendor disputes | High | Design fair-pay scorecards |
| RWE/Observational Analyst | 50–65% | Cohort pulls, propensity drafts | Confounding logic, causal rigor | Medium | Lead causal inference reviews |
| Biometrics Programmer | 55–70% | Derivations, listings, TLF shells | Edge-case programming & audits | High | Specialize in adaptive trials |
| Investigator/PI | 10–20% | Drafting, templated replies | Clinical judgment, patient trust | Low | Scale trials via site networks |
| Sub-I / Rater | 30–45% | Scripted assessments | Subtlety, inter-rater reliability | Medium | Own calibration and adjudication |
| Site Contracts | 50–65% | Template diffs, red-line patterns | Local law nuance, speed | High | Be the fast-turn specialist |
| Patient Recruitment | 60–75% | Audience/routing automation | Community trust, equity | High | Own hard-to-reach cohorts |
| Medical Affairs (MSL) | 25–40% | Briefing packs, KOL scans | KOL trust, live nuance | Low | Run scientific engagement boards |
| AR/VR/Digital Endpoint Lead | 30–45% | Scene builds, routine QA | Validation, context-of-use | Medium | Claim validated endpoints |

2) What AI will do better than most teams (and how to redirect your value)

a) Continuous reconciliation across silos.

Agents watch EDC deltas, eCOA timestamps, lab imports, and TMF filings simultaneously, flagging cross-system inconsistencies within minutes. Your edge becomes explainability: documenting why a deviation stands, how it affects estimands, and which mitigation preserves integrity. Sharpen this language with the glossaries in PI terms and CRA monitoring terms.
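To make “continuous reconciliation” concrete, here is a minimal sketch that compares visit dates exported from an EDC against eCOA completion dates and flags disagreements. The record shapes, the `reconcile` helper, and the one-day tolerance are hypothetical illustrations, not any vendor’s API.

```python
from datetime import date

# Hypothetical exports: (participant_id, visit_name) -> date recorded in each system.
edc_visits = {
    ("P001", "Week 4"): date(2025, 3, 3),
    ("P002", "Week 4"): date(2025, 3, 5),
    ("P003", "Week 4"): date(2025, 3, 7),
}
ecoa_entries = {
    ("P001", "Week 4"): date(2025, 3, 3),
    ("P002", "Week 4"): date(2025, 3, 9),  # four days after the EDC visit date
    ("P004", "Week 4"): date(2025, 3, 8),  # no matching EDC visit
}


def reconcile(edc, ecoa, tolerance_days=1):
    """Return (key, issue) tuples for records that disagree between the two sources."""
    issues = []
    for key in sorted(set(edc) | set(ecoa)):
        if key not in ecoa:
            issues.append((key, "EDC visit with no eCOA entry"))
        elif key not in edc:
            issues.append((key, "eCOA entry with no EDC visit"))
        else:
            gap = abs((ecoa[key] - edc[key]).days)
            if gap > tolerance_days:
                issues.append((key, f"dates differ by {gap} days"))
    return issues


for key, issue in reconcile(edc_visits, ecoa_entries):
    print(key, issue)
```

The code only surfaces mismatches. Deciding whether P002’s four-day gap is a protocol deviation, and what it means for the estimand, is the explainability work that stays human.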

b) First-pass writing that is “too perfect.”

AI drafts CSR sections, protocol synopses, and IB updates with sterile perfection—until a regulator asks “why did you choose this imputation?” Become the owner of rationale: risk registers, conditioning assumptions, and why alternatives were rejected. Keep a fast reference of trial acronyms with Top-100 acronyms in clinical research.

c) Feasibility forecasting at scale.

Models rank sites by historic enrollment, demographics, and contracting times. Your moat: PI trust and regional nuance, the on-the-phone intelligence that models lack. Build those networks via Top-50 CROs worldwide and hedge geography with countries winning the clinical-trial race.
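A site-ranking model can be as simple as a weighted score over normalized features. The sketch below assumes three hypothetical inputs (historic enrollment rate, an eligible-population proxy, and contracting time) and hand-picked weights; real feasibility models are typically fit to past trial outcomes and use far richer features.

```python
from dataclasses import dataclass


@dataclass
class Site:
    name: str
    enrollment_per_month: float  # historic enrollment rate
    eligible_population: int     # demographic proxy for the target cohort
    contracting_days: int        # median days to execute a contract (lower is better)


# Hypothetical weights, chosen by hand for illustration.
WEIGHTS = {"enrollment": 0.5, "population": 0.3, "contracting": 0.2}


def min_max(values):
    """Scale values to [0, 1]; identical values all map to 1.0."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [1.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def rank_sites(sites):
    enroll = min_max([s.enrollment_per_month for s in sites])
    pop = min_max([s.eligible_population for s in sites])
    # Invert contracting time so faster contracting scores higher.
    contract = [1.0 - x for x in min_max([s.contracting_days for s in sites])]
    scored = [
        (WEIGHTS["enrollment"] * e + WEIGHTS["population"] * p + WEIGHTS["contracting"] * c, s.name)
        for s, e, p, c in zip(sites, enroll, pop, contract)
    ]
    return sorted(scored, reverse=True)


sites = [
    Site("Site A", 4.0, 1200, 90),
    Site("Site B", 2.5, 3000, 45),
    Site("Site C", 1.0, 800, 120),
]
for score, name in rank_sites(sites):
    print(f"{name}: {score:.2f}")
```

Note what the score cannot see: whether Site B’s PI actually has bandwidth this quarter. That is the on-the-phone layer.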

d) Risk-based monitoring triage.

AI spotlights “weirdness”; humans arbitrate meaning. Anchor central-monitor playbooks and escalation KPIs to the salary realities and staffing plans in Clinical research salary report 2025, including CRA salaries worldwide and CRC salary guide.
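Central statistical monitoring often starts with robust outlier scores on site-level metrics. This sketch uses the Iglewicz-Hoaglin modified z-score (median and median absolute deviation, with the commonly cited 3.5 cutoff) on a hypothetical AE-rate-per-site metric; the data and function names are illustrative assumptions.

```python
from statistics import median

# Hypothetical site-level metric: adverse events reported per 10 participant-visits.
ae_rate_by_site = {
    "101": 1.9, "102": 2.1, "103": 2.0, "104": 2.3,
    "105": 0.2,  # unusually low: possible under-reporting
    "106": 2.2, "107": 1.8,
}


def modified_z_scores(metric):
    """Iglewicz-Hoaglin modified z-score: 0.6745 * (x - median) / MAD."""
    values = list(metric.values())
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        return {site: 0.0 for site in metric}
    return {site: 0.6745 * (v - med) / mad for site, v in metric.items()}


def flag_outliers(metric, threshold=3.5):
    """Return sites whose absolute modified z-score exceeds the threshold."""
    return {site: round(z, 2) for site, z in modified_z_scores(metric).items() if abs(z) > threshold}


print(flag_outliers(ae_rate_by_site))
```

A flag on site 105 is only “weirdness”: it could be under-reporting, a healthier population, or a data-entry lag. Arbitrating among those is the human call.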

3) Replaceable tasks by role (and the upgrade path that pays)

CRCs. Replaceable: visit window math, pre-querying missing fields, travel/parking reminders. Upgrade path: participant journey owner. Reduce screen-to-randomize time, build equity programs, and run adherence analytics that tie telemetry to outcomes. For participant-facing micro-lessons, borrow the study-environment and test-taking patterns in proven strategies for exams.
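Visit window math is a good example of what is easy to automate. This sketch assumes a hypothetical schedule in which Day 1 is the baseline visit (so study day N falls N - 1 calendar days later) and each visit has a symmetric ± window; real protocols may anchor to randomization, use asymmetric windows, or count from Day 0.

```python
from datetime import date, timedelta

# Hypothetical schedule: visit name -> (target study day, allowed +/- window in days).
SCHEDULE = {"Week 2": (15, 2), "Week 4": (29, 3), "Week 12": (85, 7)}


def visit_windows(day1, schedule=SCHEDULE):
    """Map each visit to (earliest, target, latest) calendar dates, with Day 1 = day1."""
    windows = {}
    for visit, (target_day, window) in schedule.items():
        target = day1 + timedelta(days=target_day - 1)
        windows[visit] = (target - timedelta(days=window), target, target + timedelta(days=window))
    return windows


def in_window(visit_date, window):
    earliest, _, latest = window
    return earliest <= visit_date <= latest


windows = visit_windows(date(2025, 1, 6))
for visit, (earliest, target, latest) in windows.items():
    print(visit, earliest, target, latest)
print(in_window(date(2025, 2, 7), windows["Week 4"]))  # False: one day past the window
```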

CRAs. Replaceable: SDV sampling, auto-queries, deviation templates. Upgrade: central monitoring conductor—create risk signals, coach sites, and arbitrate which deviations matter. Align language with monitoring terms for CRAs.
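Risk-based SDV sampling can be sketched as sizing a per-site sample by risk tier. The tier names and percentages below are hypothetical placeholders, not a regulatory standard; the fixed random seed keeps the selection reproducible for audit.

```python
import math
import random

# Hypothetical risk tiers -> percent of a site's participants selected for full SDV.
SDV_PERCENT = {"high": 100, "medium": 50, "low": 20}


def sdv_sample(participants_by_site, risk_by_site, seed=2028):
    """Select participants for SDV per site, sized by that site's risk tier."""
    rng = random.Random(seed)  # fixed seed: same inputs always yield the same, auditable selection
    selection = {}
    for site in sorted(participants_by_site):  # stable order so the RNG draws are reproducible
        participants = participants_by_site[site]
        k = math.ceil(SDV_PERCENT[risk_by_site[site]] * len(participants) / 100)
        selection[site] = sorted(rng.sample(participants, k))
    return selection


participants = {
    "101": [f"101-{i:03d}" for i in range(1, 11)],
    "102": [f"102-{i:03d}" for i in range(1, 21)],
}
risk = {"101": "high", "102": "low"}
picked = sdv_sample(participants, risk)
print({site: len(ids) for site, ids in picked.items()})  # {'101': 10, '102': 4}
```

The code decides how much to sample; deciding which of the sampled discrepancies matter is the conductor role described above.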

Data Managers & Programmers. Replaceable: spec-driven transforms, deduplication, unit harmonization. Upgrade: data product owners who curate gold standards, version semantic layers, and govern model drift. Cross-train on digital endpoints using the wearables strategy in the Apple/Fitbit playbook, then check trial geographies in APAC sites and cost hedges via the CRO directory.
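Unit harmonization is a typical spec-driven transform. The sketch converts two analytes to a single target unit using factors derived from molar mass (glucose about 180.16 g/mol, creatinine about 113.12 g/mol). The record shape, analyte list, and `harmonize` helper are hypothetical; a real pipeline would use a maintained, validated conversion table.

```python
# Target unit per analyte, and multiplicative factors from each source unit.
# Glucose: mmol/L = mg/dL * 10 / 180.16. Creatinine: umol/L = mg/dL * 10000 / 113.12 (about 88.4).
TARGET_UNIT = {"glucose": "mmol/L", "creatinine": "umol/L"}
TO_TARGET = {
    ("glucose", "mg/dL"): 10 / 180.16,
    ("glucose", "mmol/L"): 1.0,
    ("creatinine", "mg/dL"): 10_000 / 113.12,
    ("creatinine", "umol/L"): 1.0,
}


def harmonize(record):
    """Return a copy of a lab record converted to its analyte's target unit."""
    key = (record["test"], record["unit"])
    if key not in TO_TARGET:
        # Unknown unit: route to a human instead of guessing.
        raise ValueError(f"no conversion for {key}")
    value = round(record["value"] * TO_TARGET[key], 2)
    return {**record, "value": value, "unit": TARGET_UNIT[record["test"]]}


labs = [
    {"subject": "P001", "test": "glucose", "value": 99.0, "unit": "mg/dL"},
    {"subject": "P002", "test": "glucose", "value": 5.4, "unit": "mmol/L"},
    {"subject": "P001", "test": "creatinine", "value": 1.0, "unit": "mg/dL"},
]
for row in labs:
    print(harmonize(row))
```

Raising on an unmapped unit, rather than passing it through, is the kind of gold-standard rule a data product owner sets and versions.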

Medical Writers. Replaceable: first-pass IB/CSR boilerplate. Upgrade: regulator whisperer—author rationale memos, estimand narratives, and deficiency responses that pass scrutiny. Build muscle with sponsor context in US sponsor insights.

PV/Safety. Replaceable: case ingestion and dedupe. Upgrade: causality and aggregate signal boards; run global signal adjudication with ethics sensitivity across regions highlighted in countries winning the clinical-trial race.
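Duplicate case detection can be approximated by requiring exact matches on a few key fields plus fuzzy similarity on the event description. The fields, threshold, and `likely_duplicates` helper below are hypothetical; Python’s standard `difflib.SequenceMatcher` stands in here for the more sophisticated matching that safety databases use.

```python
from difflib import SequenceMatcher
from itertools import combinations

# Hypothetical case fields.
cases = [
    {"id": "C1", "age": 54, "sex": "F", "onset": "2025-02-10", "event": "severe headache and nausea"},
    {"id": "C2", "age": 54, "sex": "F", "onset": "2025-02-10", "event": "Severe headache, nausea"},
    {"id": "C3", "age": 61, "sex": "M", "onset": "2025-02-11", "event": "rash on both arms"},
]


def likely_duplicates(cases, text_threshold=0.8):
    """Pair cases whose demographics and onset match exactly and whose event text is similar."""
    pairs = []
    for a, b in combinations(cases, 2):
        if (a["age"], a["sex"], a["onset"]) != (b["age"], b["sex"], b["onset"]):
            continue
        similarity = SequenceMatcher(None, a["event"].lower(), b["event"].lower()).ratio()
        if similarity >= text_threshold:
            pairs.append((a["id"], b["id"], round(similarity, 2)))
    return pairs


print(likely_duplicates(cases))
```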

Reg Ops & SSU. Replaceable: dossier assembly, red-line diffs. Upgrade: fast-turn specialists who crack stubborn authorities. Keep a live map of dependable throughput with APAC site capacity and push execution via Top-50 CROs worldwide.
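Red-line diffing is mechanical: Python’s standard `difflib` can isolate which clauses a site changed relative to the sponsor template. The clause text and the `changed_clauses` helper below are hypothetical illustrations.

```python
import difflib

# Hypothetical clause text: sponsor template vs. the site's returned red-line.
template = [
    "Payment is due within 45 days of invoice receipt.",
    "Site shall retain records for 15 years.",
    "Governing law: State of Delaware.",
]
site_version = [
    "Payment is due within 30 days of invoice receipt.",
    "Site shall retain records for 15 years.",
    "Governing law: the laws of the site's jurisdiction.",
]


def changed_clauses(old, new):
    """Return (template_clauses, site_clauses) pairs for every non-equal region."""
    matcher = difflib.SequenceMatcher(None, old, new)
    return [
        (old[i1:i2], new[j1:j2])
        for tag, i1, i2, j1, j2 in matcher.get_opcodes()
        if tag != "equal"
    ]


print("\n".join(difflib.unified_diff(template, site_version, "template", "site", lineterm="")))
for before, after in changed_clauses(template, site_version):
    print(before, "->", after)
```

The diff shows that payment terms moved from 45 to 30 days; whether to accept that is the negotiation a fast-turn specialist owns.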


4) Where humans still beat AI (and how to quantify it)

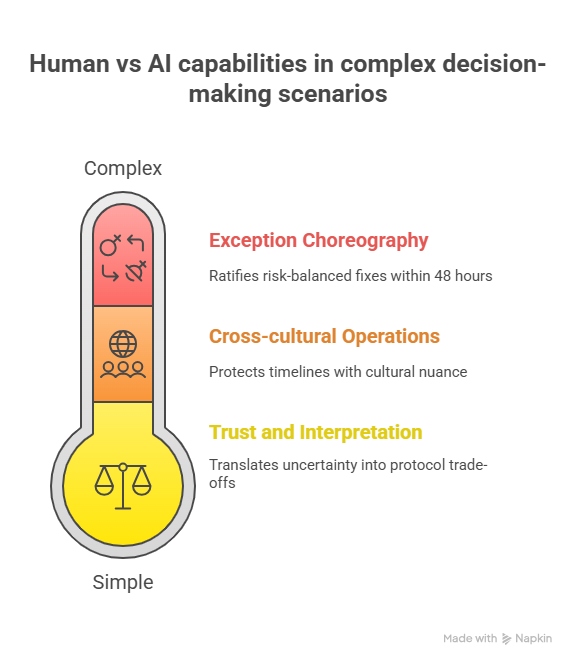

Trust and interpretation under ambiguity. Regulators don’t approve “high probability” decisions; they approve defended choices. Your leverage is decision memos that translate uncertainty into protocol trade-offs. Build a reference vault organized around PI terms in PI terminology and acronyms in Top-100 acronyms.

Cross-cultural operations. AI can rank sites, but it can’t earn a PI’s Saturday morning. Humans who close ethics surprises and contracting traps protect timelines; use throughput intelligence in APAC site directory and Brexit-era nuance in UK clinical research outlook.

Exception choreography. AI flags outliers; only humans can convene PI, sponsor, stats, and PV to ratify a risk-balanced fix within 48 hours. Document time-to-resolution as a KPI; defend budgets using the salary report in 2025 salary benchmarks and role-specific data like CRA salaries worldwide.
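Time-to-resolution as a KPI can be computed from a simple exception log. This sketch assumes a hypothetical log format of (id, flagged time, resolved time or None) and treats any still-open item older than 48 hours as an SLA breach.

```python
from datetime import datetime, timedelta
from statistics import median

SLA = timedelta(hours=48)

# Hypothetical exception log: (exception id, flagged at, resolved at or None if still open).
exceptions = [
    ("EX-01", datetime(2025, 5, 1, 9, 0), datetime(2025, 5, 2, 15, 0)),
    ("EX-02", datetime(2025, 5, 2, 10, 0), datetime(2025, 5, 5, 10, 0)),
    ("EX-03", datetime(2025, 5, 3, 8, 0), datetime(2025, 5, 3, 20, 0)),
    ("EX-04", datetime(2025, 5, 4, 12, 0), None),
]


def resolution_kpis(log, now):
    """Summarize time-to-resolution against the 48-hour SLA; `now` ages still-open items."""
    resolved = [(eid, done - flagged) for eid, flagged, done in log if done is not None]
    open_breaches = [eid for eid, flagged, done in log if done is None and now - flagged > SLA]
    if not resolved:
        return {"median_hours_to_resolution": None, "resolved_within_sla": None, "sla_breaches": open_breaches}
    hours = [d.total_seconds() / 3600 for _, d in resolved]
    return {
        "median_hours_to_resolution": median(hours),
        "resolved_within_sla": sum(d <= SLA for _, d in resolved) / len(resolved),
        "sla_breaches": [eid for eid, d in resolved if d > SLA] + open_breaches,
    }


print(resolution_kpis(exceptions, now=datetime(2025, 5, 7, 12, 0)))
```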

5) Career pivot map: 90-day reskill sprints that move comp bands

Sprint A: AI-literate RBM lead (CRAs/CRCs).

Master signal-driven SDV, deviation priority logic, and escalation trees. Build dashboards that track on-wrist adherence, missingness, and conditional power for digital endpoints. Cement site networks using Top-50 CROs worldwide and choose high-throughput geographies from countries winning the clinical-trial race.
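For the conditional-power and missingness tiles on such a dashboard, one standard choice is conditional power under the current trend, written with the B-value: with interim statistic Z_t at information fraction t and one-sided level alpha, CP = 1 - Phi((z_{1-alpha} - Z_t / sqrt(t)) / sqrt(1 - t)). The sketch assumes a normally distributed test statistic and one-sided alpha of 0.025; the function names and the simple missingness definition (share of expected wear days with no usable data) are hypothetical.

```python
from math import sqrt
from statistics import NormalDist

Z = NormalDist()  # standard normal distribution


def conditional_power(z_interim, info_fraction, alpha=0.025):
    """Conditional power under the current trend (one-sided alpha).

    The drift is estimated from the interim data as z_interim / sqrt(t), giving
    CP = 1 - Phi((z_{1-alpha} - z_interim / sqrt(t)) / sqrt(1 - t)) for 0 < t < 1.
    """
    t = info_fraction
    z_crit = Z.inv_cdf(1 - alpha)
    return 1 - Z.cdf((z_crit - z_interim / sqrt(t)) / sqrt(1 - t))


def missingness(days_with_data, expected_days):
    """Share of expected wear days with no usable wearable data."""
    return 1 - days_with_data / expected_days


print(round(conditional_power(z_interim=1.2, info_fraction=0.5), 3))
print(round(missingness(days_with_data=23, expected_days=28), 3))
```

Current-trend conditional power is only one convention; some teams also report it under the design effect size, and choosing between them is exactly the kind of rationale worth writing down.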

Sprint B: Reg-writing specialist (Writers/Reg Ops).

Own justification memos and regulator Q&A. Build templates that tie estimands to operational realities (deviations, rescue paths). Keep sponsor context fresh via US sponsor insights.

Sprint C: Digital endpoints & DCT execution (Data/Clinical).

Pick one stack (wearables or XR). Validate context-of-use, freeze firmware/content, and define artifact controls. For site expansion, use APAC site directory and CRO redundancy from Top-50 CROs.

Sprint D: Safety signal boards (PV/Medical Monitors).

Automate case triage but humanize causality panels. Publish internal guidance on false positives, time-to-escalation, and patient-first language. For salary planning and hiring pitch, use PV growth & salaries report.

6) FAQs — the hard questions teams ask in 2025 (answered for 2028 reality)

- Which roles are most exposed? TMF specialists, feasibility analysts, data programmers, payments ops, and first-pass medical writing see the steepest compression. Hedge by owning inspection readiness, PI relationships, and rationale memos. Benchmark alternatives and openings across organizations using Top-50 CROs worldwide.

- Which metrics prove our value? Time-to-decision on deviations, screen-to-randomize compression, recruitment in tough geographies (use the APAC site maps), and regulator acceptance of your memos. Price your value using the salary report 2025, CRA pay, and CRC salary guide.

- How do we work with copilots instead of being replaced by them? Make copilots amplify you: you write the decision memo and the risk rationale, and you host the PI alignment. Keep your language precise with PI terms and CRA monitoring terms.

- Will AI create new roles? Yes: AI quality governance, digital endpoint validation, central monitoring architecture, and participant engagement PMs. These cluster near sponsors in US sponsor ecosystems and in the fast-turn regions mapped in countries winning the clinical-trial race.

- Which skills should we train now? Risk communication, estimand literacy, equity-minded recruitment, and exception choreography across PI, sponsor, IRB, and statistician. Package these with operational literacy using CRA terms and the acronyms in the Top-100 guide.

- Where should we place site and geography bets? Follow activation speed and patient availability. Start with APAC throughput in the site directory, diversify with countries winning the race, and hedge sponsor exposure using US sponsor insights.