Clinical Trial Volunteer Registries & Platforms: Comprehensive Directory

Clinical trial enrollment is where budgets go to die. You can have a perfect protocol, a motivated sponsor, and a strong site network, and still miss timelines because the right participants never enter your funnel. Volunteer registries and recruitment platforms are the fastest way to add structured demand, but they can also create screen failure spikes, duplicate enrollment risk, privacy exposure, and unrealistic expectations if you choose blindly. This directory breaks platforms down by what they solve, how to vet them, and how to build a recruitment engine that survives audits and keeps enrollment predictable.

1) How volunteer registries and platforms actually work and why most teams misuse them

Volunteer registries and platforms are not magic pools of ready participants. They are distribution systems that connect a message to an audience, then filter that audience through eligibility, availability, geography, and willingness to follow study requirements. When teams fail, it is usually because they treat “more leads” as the same thing as “more randomized participants.”

The five hidden bottlenecks that platforms cannot fix for you

Eligibility mismatch caused by sloppy criteria interpretation. If your inclusion and exclusion criteria are written in a way that sites interpret inconsistently, platforms will flood you with the wrong traffic. Tighten endpoint clarity and operational definitions first. Use internal standards like primary vs secondary endpoints clarified with examples to reduce ambiguity, and align visit expectations with data capture rules in case report form best practices.

Screen failure driven by unrealistic pre screen logic. Platforms can pre screen, but they cannot replace clinical judgment or confirm medical record details reliably unless your workflow is designed for it. If you are seeing screen fail spikes, your root cause is often process design, not marketing. This connects directly to how sites operate in CRC responsibilities and certification and the oversight pressures described in CRA roles, skills, and career path.

Duplicate enrollment and professional volunteer behavior. Registries can attract participants who hop between studies, especially when compensation is a motivator. Your mitigation is not vibes. It is controls, identity verification processes, and consistent source documentation. Strengthen documentation discipline and traceability expectations using CRF definition, types, and best practices and tighten oversight using principles implied across clinical research monitoring career roadmaps.

Randomization and blinding pressure points. Recruitment touches trial integrity. When enrollment is rushed, teams cut corners, and that is how randomization mistakes and unblinding events happen. If your recruitment engine increases volume but lowers compliance, your study becomes fragile. Reinforce operational understanding using randomization techniques explained clearly and blinding types and importance.

Retention collapse after consent. Many platforms optimize for leads, not retention. If your protocol burden is high, retention will fail unless your site experience is designed with participant reality in mind. Use the strategic insights in patient recruitment and retention trends and operationalize them with the guardrails described in placebo controlled trials essentials.

What a “good” platform looks like in the real world

A platform is strong when it can prove:

Where the audience comes from and how it is refreshed

How consent and privacy are handled

How duplicates are prevented

How pre screening is validated

How diversity goals are supported without compromising data quality

To pressure test platform claims, compare them to the industry realities in the clinical research technology adoption report and the speed and scale pressures documented in emerging markets for clinical trials.

2) What to choose based on your enrollment problem

This is the decision logic that prevents wasted spend. Pick the platform category based on the specific failure mode that is killing your timeline.

If your issue is low top of funnel volume

You need reach, segmentation, and message testing. Direct to participant marketplaces, social channels, and search based recruitment can increase volume quickly. The danger is quality collapse. Reduce that risk by tightening pre screen logic and ensuring your eligibility language is consistent with how endpoints and visit requirements are understood internally. Use primary vs secondary endpoints to align what outcomes are being measured, then operationalize data capture rules using CRF best practices so you do not recruit people who cannot realistically complete the study.

When volume increases, monitoring load increases. If your oversight team is not ready, you get deviations and messy documentation. This is where the accountability described in CRA roles and skills matters, because recruitment is not separate from quality.

If your issue is high screen failure

High screen failure is a systems signal. Either your pre screen is weak, your criteria are too strict for your channel, or sites are not aligning eligibility interpretation. EHR cohort tools, hospital registries, and physician referral networks tend to reduce screen failure because eligibility is closer to clinical reality. When you do use broad platforms, improve pre screen specificity and enforce escalation rules for edge cases. This aligns with the operational discipline emphasized across CRC responsibilities and the process rigor needed for clean data capture in CRF definition and best practices.

If your issue is low diversity and access barriers

Diversity goals collapse when you rely only on academic centers and high income digital channels. Community clinic partnerships, multilingual outreach platforms, and mobile units can improve access. The trap is treating diversity as a marketing checkbox instead of an operational design problem. Retention, visit logistics, and burden matter. Use the field realities documented in patient recruitment and retention trends and align your participant experience with the compliance constraints of placebo controlled trial conduct.

If your issue is global recruitment pressure

Global trials add regulatory, cultural, and operational variance. The platform that works in one region can fail in another because trust dynamics differ. Use country and region aware approaches and anticipate market shifts described in Africa clinical trials predictions, India clinical trial boom, and China clinical research market predictions. If you recruit across regions without adjusting operations, you get inconsistent source documentation, inconsistent consent comprehension, and unpredictable retention.
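The decision logic in this section can be written down as a simple lookup so that teams pick a channel category from the failure mode instead of from a vendor pitch. The sketch below only restates the categories named above; the key names (`low_volume`, `high_screen_failure`, and so on) and the `recommend` helper are hypothetical labels, not an established taxonomy.

```python
# Failure mode -> channel categories and main guardrail, as described in this section.
# Key names are hypothetical; adapt them to your own planning vocabulary.
PLATFORM_GUIDE = {
    "low_volume": {
        "channels": ["direct to participant marketplaces", "social channels",
                     "search based recruitment"],
        "guardrail": "tighten pre screen logic to avoid quality collapse",
    },
    "high_screen_failure": {
        "channels": ["EHR cohort tools", "hospital registries",
                     "physician referral networks"],
        "guardrail": "improve pre screen specificity and escalate edge cases",
    },
    "low_diversity": {
        "channels": ["community clinic partnerships",
                     "multilingual outreach platforms", "mobile units"],
        "guardrail": "design for retention, visit logistics, and burden",
    },
    "global_pressure": {
        "channels": ["country and region aware approaches"],
        "guardrail": "adjust operations per region before scaling outreach",
    },
}


def recommend(failure_mode):
    """Return the channel categories and guardrail for a named failure mode."""
    if failure_mode not in PLATFORM_GUIDE:
        known = ", ".join(sorted(PLATFORM_GUIDE))
        raise ValueError(f"unknown failure mode {failure_mode!r}; expected one of: {known}")
    return PLATFORM_GUIDE[failure_mode]


choice = recommend("high_screen_failure")
print(choice["channels"], "|", choice["guardrail"])
```

A table like this is most useful as a checklist during planning: if the failure mode cannot be named, the platform decision is probably premature.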

3) How to vet platforms for compliance, data integrity, and real enrollment conversion

Most vendors will show vanity metrics. You need answers that prevent the hidden disasters.

Ask these questions and do not accept vague answers

Where do participants come from, and how are they refreshed? Stale registries create repeated outreach to the same people, which increases no show rates. Ask for panel refresh frequency, opt in logic, and how they avoid recycling.

How do they handle identity and duplicates? If they cannot describe deduping logic, cross study checks, and identity validation, you are buying risk. Duplicates are not only a recruitment issue. They can become protocol deviations and data integrity issues that ripple into monitoring. That is why operational controls implied in clinical research monitoring roadmaps matter, because someone must detect and escalate these issues.
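When a vendor describes deduping logic, it helps to know what a minimal version looks like so you can judge whether their answer goes beyond it. The sketch below assumes a hypothetical scheme: normalize name and date of birth, hash them with a secret salt, and group referrals that share a key. The field names (`first`, `last`, `dob`, `id`) and the functions are illustrative, and real identity validation needs more than these fields.

```python
import hashlib
import unicodedata


def identity_key(first_name, last_name, date_of_birth, salt):
    """Build a salted hash from normalized identity fields (hypothetical scheme)."""
    def norm(text):
        # Strip accents, case, and whitespace so trivial variants collide.
        text = unicodedata.normalize("NFKD", text)
        text = "".join(ch for ch in text if not unicodedata.combining(ch))
        return "".join(text.lower().split())

    raw = "|".join([norm(first_name), norm(last_name), date_of_birth, salt])
    return hashlib.sha256(raw.encode("utf-8")).hexdigest()


def find_duplicates(referrals, salt):
    """Group referral ids that map to the same identity key."""
    seen = {}
    for ref in referrals:
        key = identity_key(ref["first"], ref["last"], ref["dob"], salt)
        seen.setdefault(key, []).append(ref["id"])
    return [ids for ids in seen.values() if len(ids) > 1]


# Illustrative records only; not real participants.
sample_referrals = [
    {"id": "R1", "first": "José", "last": "Alvarez", "dob": "1980-02-01"},
    {"id": "R2", "first": "jose ", "last": "ALVAREZ", "dob": "1980-02-01"},
    {"id": "R3", "first": "Ana", "last": "Lee", "dob": "1975-07-30"},
]
print(find_duplicates(sample_referrals, salt="example-salt"))
```

Exact-match hashing misses typos and deliberate variations, which is why the question about cross study checks and identity validation still matters. A hashed key is also still derived from personal data, so it belongs under the same privacy controls as the source fields.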

How do they validate eligibility beyond self report? Self report is useful, but it is fragile. Strong systems incorporate validation steps, physician confirmation workflows, or EHR signal checks. If your study has complex endpoints, eligibility requires clarity and consistent interpretation, grounded in primary vs secondary endpoints and documented cleanly through CRF best practices.

How do they reduce unblinding risk? Recruitment messaging can accidentally reveal study details that influence behavior. If your trial requires strong blinding integrity, demand controls that align with blinding types and importance and ensure messaging does not create bias.

How do they support retention? Ask what happens after referral. Do they provide reminder systems, transportation support, and participant education that reduces dropouts? Use the pain point data in recruitment and retention trends to demand retention minded workflows.

Verify their reporting like you would verify trial data

Demand a funnel view:

Impressions or outreach volume

Click or response volume

Pre screen completion

Eligible rate

Referred to site

Scheduled

Screened

Randomized

Then ask for the top three screen failure reasons and how they plan to reduce them. When vendors cannot answer, they are not accountable for enrollment, only for leads.
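To make the funnel view comparable across vendors, convert raw counts into step conversion (share of the previous stage) and overall conversion (share of initial outreach). The sketch below assumes the eight stages listed above; the stage keys, the `funnel_report` function, and all counts are hypothetical and for illustration only.

```python
# Stage keys mirror the funnel listed above; the names themselves are hypothetical.
FUNNEL_STAGES = [
    "outreach", "responses", "prescreen_completed", "eligible",
    "referred_to_site", "scheduled", "screened", "randomized",
]


def funnel_report(counts):
    """Return (stage, count, step_rate, overall_rate) rows for each stage.

    step_rate is the share of the previous stage that reached this stage;
    overall_rate is the share of the first stage that reached this stage.
    A rate is None when its denominator is missing or zero.
    """
    rows = []
    first = counts[FUNNEL_STAGES[0]]
    previous = None
    for stage in FUNNEL_STAGES:
        n = counts[stage]
        step = None if previous in (None, 0) else n / previous
        overall = None if first == 0 else n / first
        rows.append((stage, n, step, overall))
        previous = n
    return rows


# Made-up numbers for one vendor, not benchmarks.
example_counts = {
    "outreach": 50000, "responses": 2000, "prescreen_completed": 800,
    "eligible": 240, "referred_to_site": 200, "scheduled": 120,
    "screened": 90, "randomized": 45,
}

for stage, n, step, overall in funnel_report(example_counts):
    step_txt = "n/a" if step is None else f"{step:.1%}"
    overall_txt = "n/a" if overall is None else f"{overall:.2%}"
    print(f"{stage:<20} {n:>6}  step={step_txt:>6}  overall={overall_txt}")
```

The step rates show where the funnel leaks. A vendor that reports only the first two or three stages is giving you lead metrics, not enrollment metrics.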

This is also where technology matters. Platforms powered by automation can increase speed but can also amplify mistakes. Cross check claims against the realities discussed in the clinical research technology adoption report so you do not buy hype instead of compliance.

4) How to operationalize registries and platforms at the site level without breaking quality

A platform can generate referrals, but sites convert them into enrolled participants. If you do not build a clean handoff system, sites will drown in admin work and quality will degrade.

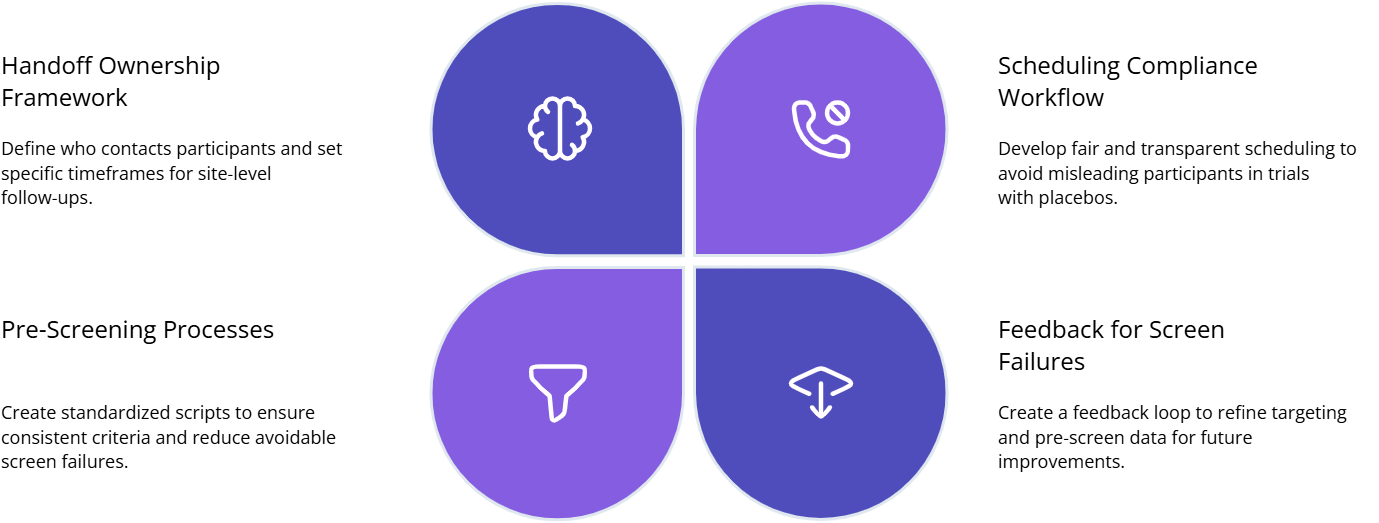

Build a registry to site handoff playbook

Define ownership and response time. Who contacts the participant, and within what timeline? If you leave it vague, referrals die. Map responsibilities using the workflow logic in CRC responsibilities and oversight expectations in CRA roles and skills.
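A response time rule is only useful if someone can see when it is breached. The sketch below lists referrals that were not contacted within a target window, together with the owner who was responsible. It assumes a hypothetical 24 hour target and hypothetical field names (`received_at`, `first_contact_at`, `owner`); set the real target per study.

```python
from datetime import datetime, timedelta

RESPONSE_TARGET = timedelta(hours=24)  # hypothetical target, not a standard


def overdue_referrals(referrals, now, target=RESPONSE_TARGET):
    """Return (id, owner, status) for referrals that missed the contact window."""
    overdue = []
    for ref in referrals:
        contacted = ref.get("first_contact_at")
        deadline = ref["received_at"] + target
        if contacted is None and now > deadline:
            overdue.append((ref["id"], ref["owner"], "no contact yet"))
        elif contacted is not None and contacted > deadline:
            overdue.append((ref["id"], ref["owner"], "contacted late"))
    return overdue


# Illustrative referral log.
sample_log = [
    {"id": "A", "owner": "site_01", "received_at": datetime(2024, 1, 1, 9, 0),
     "first_contact_at": datetime(2024, 1, 1, 15, 0)},
    {"id": "B", "owner": "site_02", "received_at": datetime(2024, 1, 1, 9, 0),
     "first_contact_at": None},
    {"id": "C", "owner": "site_01", "received_at": datetime(2024, 1, 1, 9, 0),
     "first_contact_at": datetime(2024, 1, 2, 18, 0)},
    {"id": "D", "owner": "site_02", "received_at": datetime(2024, 1, 3, 8, 0),
     "first_contact_at": None},
]
print(overdue_referrals(sample_log, now=datetime(2024, 1, 3, 9, 0)))
```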

Standardize pre screen scripts. Scripts prevent inconsistent interpretation of criteria. They also reduce screen failures. Make scripts consistent with endpoint definitions in primary vs secondary endpoints and ensure your data capture expectations match CRF best practices.

Create a compliance safe scheduling workflow. Participants who feel rushed or misled drop out. Build a process that explains requirements clearly without biasing expectations, especially when placebo arms exist. Use principles from placebo controlled trials essentials and protect integrity using blinding importance.

Install a screen failure feedback loop. Every screen fail reason should feed back into the platform’s targeting and pre screen. If you do not share reasons, the platform keeps generating the same wrong traffic.
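The feedback loop can start as a simple tally of screen failure reasons that the site shares with the platform on a fixed cadence. The sketch below assumes a hypothetical screening log with `outcome` and `reason` fields; the reason labels are invented for illustration.

```python
from collections import Counter


def top_failure_reasons(screen_logs, n=3):
    """Return the n most common screen failure reasons as (reason, count, share)."""
    reasons = [log["reason"] for log in screen_logs if log["outcome"] == "screen_fail"]
    total = len(reasons)
    return [(reason, count, count / total)
            for reason, count in Counter(reasons).most_common(n)]


# Illustrative log entries; reasons are made up.
sample_screen_logs = [
    {"outcome": "screen_fail", "reason": "lab value out of range"},
    {"outcome": "screen_fail", "reason": "excluded medication"},
    {"outcome": "enrolled", "reason": None},
    {"outcome": "screen_fail", "reason": "lab value out of range"},
    {"outcome": "screen_fail", "reason": "cannot meet visit schedule"},
    {"outcome": "screen_fail", "reason": "excluded medication"},
    {"outcome": "enrolled", "reason": None},
    {"outcome": "screen_fail", "reason": "lab value out of range"},
]

for reason, count, share in top_failure_reasons(sample_screen_logs):
    print(f"{reason}: {count} ({share:.0%})")
```

Each top reason should map to a concrete change, such as a new pre screen question or a targeting exclusion. Otherwise the tally is just reporting.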

Control the two high risk failure modes

Duplicate enrollment risk. This is operational. It requires identity checks, participation history questions, and staff training to flag patterns. If you ignore it, it becomes a monitoring and compliance problem that increases oversight pressure described in clinical research monitoring career roadmaps.
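Participation history questions are easier to apply consistently when staff have explicit escalation rules. The sketch below flags a participant whose self-reported history shows a very recent study or many studies in the past year. Both thresholds (`min_gap_days`, `max_studies_per_year`) are hypothetical placeholders, not protocol or regulatory values.

```python
from datetime import date, timedelta


def participation_flags(history, today, min_gap_days=30, max_studies_per_year=3):
    """Return reasons to escalate based on self-reported study end dates.

    history is a list of datetime.date values, one per prior study.
    Thresholds are illustrative defaults; the protocol should define real ones.
    """
    flags = []
    if history:
        days_since_last = (today - max(history)).days
        if days_since_last < min_gap_days:
            flags.append(f"last study ended {days_since_last} days ago")
    one_year_ago = today - timedelta(days=365)
    recent = [d for d in history if d >= one_year_ago]
    if len(recent) > max_studies_per_year:
        flags.append(f"{len(recent)} studies in the past year")
    return flags


# Illustrative history for one participant.
sample_history = [date(2024, 5, 20), date(2024, 3, 1), date(2023, 12, 1), date(2023, 9, 1)]
print(participation_flags(sample_history, today=date(2024, 6, 1)))
```

A flag is a prompt for a documented staff review, not an automatic exclusion, because self-reported history can be incomplete in either direction.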

Unblinding through messaging. Recruitment messaging can accidentally reveal too much, leading participants to infer their allocation or change their behavior. Audit recruitment copy against the rules implied by blinding types and importance and ensure your randomization process is understood by staff using randomization techniques explained clearly.

5) The best platform mix for common trial types and recruitment realities

A single platform rarely solves recruitment. Most winning strategies use a layered mix.

For Phase 1 and healthy volunteer heavy studies

Healthy volunteer databases and fast scheduling workflows matter most. The risk is over participation and behavior motivated by compensation. Use stricter verification and participation history checks, and enforce documentation discipline through the principles behind CRF best practices.

For chronic disease trials with long follow up

Retention wins enrollment. Use advocacy registries, clinic partnerships, and retention support layers. Tie your approach to real world evidence and patient experience trends described in RWE integration trends and the realities in recruitment and retention trends.

For global multi region trials

Use region aware registries, multilingual outreach, and clinic partnerships. Then adjust assumptions based on where clinical trial growth is accelerating, using emerging markets analysis and macro shifts described in Africa clinical trials frontier plus India clinical trial boom.

For trials with complex endpoints and high risk of protocol deviations

Use clinically grounded registries, EHR cohort tools, and referral networks that reduce screen failure. You must align everyone on endpoint definitions and capture rules. Reinforce internal clarity using primary vs secondary endpoints and strengthen data capture discipline with CRF definition and best practices. Then protect integrity with randomization techniques and blinding types and importance so recruitment speed does not damage trial validity.

6) FAQs: Clinical trial volunteer registries and platforms

What is the difference between a volunteer registry and a recruitment platform?

A volunteer registry is typically a stored pool of people who opted in to be contacted about studies. A recruitment platform is a system that generates and routes interest through targeting, outreach, and workflow tooling. Registries can be higher trust but slower. Platforms can be faster but higher risk if verification and privacy are weak. Your decision should depend on whether you are solving volume, eligibility accuracy, or retention, aligned with real world insights from recruitment and retention trends.

How do registries and platforms reduce screen failures?

They reduce screen failures by improving pre screen specificity, using clinically grounded data signals, and tightening routing so the right participants reach the right sites. The missing piece is your internal consistency. If criteria interpretation differs across sites, the platform cannot fix it. Align definitions using primary vs secondary endpoints and enforce clean capture rules through CRF best practices.

How do you prevent duplicate enrollment?

You need identity verification, participation history checks, and a process that flags suspicious patterns. Strong platforms can help, but sites must also follow consistent documentation practices and escalation rules. Treat duplicates as a compliance risk that affects oversight workload. This is why the responsibilities described in CRA roles and skills matter, because someone must detect patterns and push corrective action.

Can recruitment messaging compromise blinding?

Yes. Messaging that reveals too much about interventions, expected outcomes, or arm differences can influence behavior and compromise integrity. Audit recruitment copy against the operational rules implied by blinding types and importance and ensure staff understand allocation processes using randomization techniques explained clearly.

How should sites handle referrals from registries and platforms?

The site must have a playbook: defined ownership, response time targets, standardized scripts, and a feedback loop for screen failures. Without this, referrals die and staff burn out. Ground the workflow in CRC responsibilities and certification and connect it to clean data expectations described in CRF best practices.

How do you choose the right registry or platform mix for a new trial?

Start with the enrollment failure mode you expect. If it is volume, use high reach channels with strict verification. If it is eligibility accuracy, use clinically grounded registries and EHR based cohort tools. If it is retention, build advocacy and support layers. Then align the plan with current industry realities using the technology adoption report and the key drivers described in recruitment and retention trends.