Real-World Evidence (RWE) Integration Trends in Clinical Trials: 2025 Report

In 2025, real-world evidence is no longer a side project. Sponsors are using it to cut protocol risk, sharpen feasibility, reduce screen fails, and defend endpoints when trials face messy reality. If your site or team still treats RWE as “extra,” you will feel it as more amendments, slower enrollment, and constant data reconciliation pain. This report breaks down the biggest RWE integration trends, the operational playbook teams are using, and the systems that keep RWE credible under audit and regulatory pressure.

1: Why RWE Integration Became a 2025 Clinical Trial Survival Skill

RWE became unavoidable because clinical trials are under pressure from every direction: tighter budgets, more complex protocols, slower enrollment, higher dropout, and growing demand for diverse populations. Sponsors are responding by using RWE to make smarter decisions earlier, similar to how teams rely on structured operational guidance in the clinical research blog library and pay attention to cost signals inside the clinical research salary report. When RWE is integrated correctly, it reduces waste, protects timelines, and prevents avoidable protocol chaos.

Trend 1: RWE is shifting from “supportive” to “decision-driving”

In 2025, sponsors are using RWE to influence inclusion criteria, select endpoints that can be supported in real practice, and stress-test assumptions before the first patient visit. This mirrors the mindset that separates strong operators in clinical data management career paths from teams that struggle with reactive cleanup. RWE is becoming part of the trial design conversation, not a post hoc add-on.

Trend 2: Feasibility is becoming RWE-powered

Sites get burned when feasibility is based on “gut feel” and last year’s memory. RWE allows sponsors to model real patient counts, comorbidities, medication patterns, and visit frequency using EHR and claims data. If you work in site operations, this trend affects your daily workload: feasibility errors show up as staffing strain, as described in clinical research administrator leadership tracks, and as shifting sponsor expectations, as covered in clinical trial assistant advancement guides. Feasibility is a quality issue, not just a planning issue.
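To make “model eligible counts with inclusion logic” concrete, here is a minimal sketch that applies named inclusion and exclusion criteria to a de-identified EHR extract and counts eligible patients per site. It assumes the extract is already a list of records; the field names (`site`, `age`, `hba1c`, `egfr`, `on_insulin`) and thresholds are hypothetical placeholders, not taken from any real protocol.

```python
from collections import Counter

# Hypothetical de-identified EHR extract: one dict per patient.
patients = [
    {"site": "A", "age": 54, "hba1c": 8.1, "egfr": 75, "on_insulin": False},
    {"site": "A", "age": 71, "hba1c": 7.9, "egfr": 40, "on_insulin": False},
    {"site": "B", "age": 62, "hba1c": 9.0, "egfr": 88, "on_insulin": True},
    {"site": "B", "age": 48, "hba1c": 8.4, "egfr": 66, "on_insulin": False},
    {"site": "B", "age": 59, "hba1c": None, "egfr": 90, "on_insulin": False},
]

# Each criterion is a named predicate so the feasibility memo can map protocol text to logic.
INCLUSION = {
    "age 40-75": lambda p: 40 <= p["age"] <= 75,
    # A missing lab value is treated as "not met" (a conservative, hypothetical rule).
    "HbA1c >= 7.5": lambda p: p["hba1c"] is not None and p["hba1c"] >= 7.5,
}
EXCLUSION = {
    "eGFR < 45": lambda p: p["egfr"] < 45,
    "on insulin": lambda p: p["on_insulin"],
}

def is_eligible(p):
    """True if the patient meets every inclusion criterion and no exclusion criterion."""
    return all(f(p) for f in INCLUSION.values()) and not any(f(p) for f in EXCLUSION.values())

def eligible_counts_by_site(records):
    """Count patients per site who pass all criteria."""
    return Counter(p["site"] for p in records if is_eligible(p))

counts = eligible_counts_by_site(patients)
print(dict(counts))  # → {'A': 1, 'B': 1}
```

How missing values are handled is itself a criterion decision, so it belongs in the feasibility memo alongside the counts.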

Trend 3: Data integrity standards are rising

RWE does not get a free pass just because it is “real world.” In fact, it creates new risks: missingness, inconsistent documentation, coding differences, and bias. That is why 2025 integration is tied closely to the quality thinking in the QA specialist roadmap and the documentation discipline found in clinical regulatory specialist pathways. If you cannot defend lineage, definitions, and transformations, you will lose trust fast.
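Missingness and implausible values are the easiest of these risks to measure. A minimal sketch of a field-level data quality scorecard follows, assuming records arrive as dicts; the field names, plausible ranges, and the 90% threshold are illustrative assumptions, not regulatory standards.

```python
# Hypothetical plausibility rules: field -> (min, max) accepted range.
PLAUSIBLE_RANGES = {"systolic_bp": (60, 260), "weight_kg": (25, 300)}
THRESHOLD = 0.90  # assumed minimum pass rate for each check

def score_field(rows, field, lo, hi):
    """Completeness = share of rows with a value; plausibility = share of present values in range."""
    values = [r.get(field) for r in rows]
    present = [v for v in values if v is not None]
    completeness = len(present) / len(values) if values else 0.0
    plausible = [v for v in present if lo <= v <= hi]
    plausibility = len(plausible) / len(present) if present else 0.0
    return {"completeness": completeness, "plausibility": plausibility}

def scorecard(rows):
    """Score every field that has a rule and flag whether it clears the threshold."""
    card = {}
    for field, (lo, hi) in PLAUSIBLE_RANGES.items():
        scores = score_field(rows, field, lo, hi)
        scores["passes"] = all(v >= THRESHOLD for v in scores.values())
        card[field] = scores
    return card

rows = [
    {"systolic_bp": 120, "weight_kg": 70},
    {"systolic_bp": 135, "weight_kg": None},   # missing weight
    {"systolic_bp": 999, "weight_kg": 82},     # implausible BP, likely an entry error
    {"systolic_bp": 118, "weight_kg": 65},
]
card = scorecard(rows)
```

Here blood pressure fails on plausibility and weight fails on completeness, which points to two different fixes: an entry-error query versus a capture gap.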

Trend 4: RWE is becoming a career differentiator

Teams are hiring people who can bridge clinical operations, data, and compliance. If you can translate RWE into practical trial decisions, you move into higher-impact roles, similar to the growth patterns seen in lead clinical data analyst roles and leadership tracks like clinical research project management. RWE literacy is turning into a promotion lever.

| Trend Area | What Is Changing in 2025 | Operational Move That Works | Proof You Can Defend |
|---|---|---|---|
| Feasibility | EHR and claims data drive site selection and projections | Model eligible counts with inclusion logic before startup | Feasibility memo with criteria mapping and counts |
| Inclusion Criteria | Criteria are refined based on real comorbidities | Run a “screen fail simulation” using RWE cohorts | Screen fail risk table and mitigation plan |
| Endpoint Choice | Endpoints must align with what real practice captures | Validate endpoint availability in source systems | Endpoint capture audit and gap list |
| External Controls | More trials plan RWE external control arms | Predefine comparators and confounders early | Comparability plan and confounding diagram |
| Data Linkage | Linking EHR, claims, labs, and registries is standard | Define linkage keys and match rules up front | Linkage spec and match rate report |
| Wearables | Consumer sensors are used for supportive endpoints | Set device validation and missingness thresholds | Device QC plan and missingness dashboard |
| PRO Data | Remote PROs are integrated with RWE for context | Use consistent schedules and reminder logic | PRO completion rates and deviation log |
| Data Quality | Fitness scores for RWE datasets become expected | Score completeness, timeliness, and plausibility | Data quality scorecard with thresholds |
| Bias Control | Regulators expect a bias mitigation rationale | Document selection bias and measurement bias risks | Bias risk register and mitigation notes |
| Confounding | Confounder handling must be prespecified | Create a confounder list tied to clinical logic | Confounder definition appendix and code set |
| Code Sets | ICD, CPT, and NDC mapping errors cause drift | Version-control code sets and keep change logs | Code set repository and audit trail |
| Data Lineage | Lineage must be traceable end to end | Maintain transformation logs and reproducible pipelines | Lineage diagram and pipeline run logs |
| Privacy | De-identification plus governance is scrutinized | Use the minimum necessary data and document access controls | Access matrix and privacy impact assessment |
| Vendor Ecosystem | Multi-vendor stacks increase integration risk | Define ownership of data fields and QC responsibilities | RACI chart and integration test results |
| Interoperability | FHIR adoption grows, but data is still messy | Build normalization rules and exception handling | Normalization spec and error rate reports |
| Site Burden | Sites are asked to support RWE extraction and mapping | Use standardized extraction checklists and timelines | Extraction logs and response SLAs |
| Regulatory Strategy | RWE is planned earlier in protocols | Align the statistical plan and RWE plan from day one | Protocol section crosswalk and sign-offs |
| Hybrid Trials | RWE complements interventional data capture | Define what is trial source versus RWE source | Data source matrix and reconciliation plan |
| Safety Signals | RWE is used to detect rare events faster | Define signal thresholds and review cadence | Signal review minutes and escalation SOP |
| Recruitment | RWE is used to identify referral networks | Map referral patterns and outreach targets | Referral map and outreach tracker |
| Diversity | RWE helps measure representation gaps | Track enrollment against local population data | Diversity dashboard and corrective actions |
| Adherence | Claims and pharmacy data inform adherence | Define adherence measures and intervention triggers | Adherence algorithm and monitoring log |
| HCRU | Health care resource use (HCRU) endpoints are common | Prespecify utilization definitions and time windows | HCRU definition sheet and examples |
| Cost Endpoints | Economic endpoints influence adoption narratives | Link cost capture rules to payer datasets | Cost capture plan and dataset documentation |
| Unstructured Data | Notes and imaging reports are mined cautiously | Validate extraction and set error thresholds | Validation results and sampling audit |
| RWE Monitoring | RWE is monitored like trial data | Create a monitoring plan with QC sampling | Monitoring reports and issue closure logs |
| Change Management | Dataset updates can change results | Lock dataset versions and document the refresh policy | Version lock memo and refresh schedule |
| Audit Readiness | Auditors ask “show me the path” | Maintain reproducible outputs and documentation packs | Audit binder with lineage and QA evidence |
| Training | Teams need shared RWE literacy | Train ops, data, and regulatory teams on common terms | Training records and competency checks |
| Success Metrics | RWE ROI must be measured, not assumed | Track reductions in amendments and screen fails | Before-and-after metrics and executive summary |
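The Data Linkage row in the table calls for a linkage spec and a match rate report. A minimal sketch of deterministic linkage follows, assuming both sources share the hypothetical fields `dob`, `sex`, and `zip3`, and that a governed secret key (here a placeholder) is used to build a keyed hash (HMAC-SHA256) so raw identifiers are not compared directly. It does not implement probabilistic matching or any commercial tokenization product.

```python
import hashlib
import hmac

SECRET_KEY = b"replace-with-governed-secret"  # hypothetical shared linkage key

def link_token(rec):
    """Build a keyed hash from normalized linkage fields; this string format IS the match rule."""
    raw = f'{rec["dob"].strip()}|{rec["sex"].strip().upper()}|{rec["zip3"].strip()}'
    return hmac.new(SECRET_KEY, raw.encode("utf-8"), hashlib.sha256).hexdigest()

def match_rate_report(ehr, claims):
    """Summarize how many unique EHR tokens find a partner in claims."""
    ehr_tokens = {link_token(r) for r in ehr}
    claims_tokens = {link_token(r) for r in claims}
    matched = ehr_tokens & claims_tokens
    return {
        "ehr_unique_tokens": len(ehr_tokens),
        "claims_unique_tokens": len(claims_tokens),
        "matched": len(matched),
        "ehr_match_rate": len(matched) / len(ehr_tokens) if ehr_tokens else 0.0,
    }

ehr = [
    {"dob": "1961-04-02", "sex": "f", "zip3": "021"},
    {"dob": "1975-11-30", "sex": "M", "zip3": "100"},
    {"dob": "1988-01-15", "sex": "F", "zip3": "606"},
    {"dob": "1990-07-07", "sex": "M", "zip3": "940"},
]
claims = [
    {"dob": "1961-04-02", "sex": "F", "zip3": "021"},
    {"dob": "1975-11-30", "sex": "M", "zip3": "100"},
    {"dob": "2001-02-02", "sex": "F", "zip3": "303"},
]
report = match_rate_report(ehr, claims)
```

A coarse key like this can merge two different people who share a birth date, sex, and ZIP prefix, which is exactly why the match rule and its known failure modes belong in the linkage spec.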

2: The 2025 RWE Integration Stack: Sources, Governance, and the Non-Negotiables

The biggest shift in 2025 is that RWE integration is treated like a real program with ownership, controls, and measurable outcomes. Sponsors are building RWE pipelines the same way they build EDC and data management processes, which is why you keep seeing crossover with the skills emphasized in the top clinical data management platforms guide and the systems mindset found in a strong clinical data coordinator career path. If your team treats RWE as “someone else’s dataset,” you will inherit the mess when the first discrepancy hits.

1) Data sources are expanding, but the value comes from selecting fewer, better sources

Many teams fail by collecting everything. The winning teams select RWE sources that match the protocol decisions they need to make, and they document why. That documentation habit aligns with how compliance-minded teams think in the regulatory affairs specialist roadmap and the clinical regulatory specialist pathway. In practice, sources often include EHR, claims, registries, lab feeds, pharmacy, wearable streams, and patient-reported outcomes.

2) Governance is becoming the difference between “usable” and “unsafe”

In 2025, RWE without governance is a liability. Governance means access control, versioning, definitions, lineage, and documented decision rights. This is the same logic that protects quality programs in the QA specialist roadmap and keeps sponsor-facing teams aligned in a structured clinical research administrator pathway. Without governance, teams spend months arguing about whose number is correct.

3) The new baseline is “defendable transformations”

RWE always requires cleaning, mapping, and normalizing. The trend in 2025 is that those steps must be defendable. If someone asks, “How did you convert source reality into analysis reality?” you need a clear answer. This connects directly to how monitoring pressure is described in the top remote clinical trial monitoring tools guide and how operational leaders protect timelines in the clinical research project manager salary guide. The point is not perfection. The point is reproducibility.
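One way to answer “how did you get from source to analysis” is to log every transformation with a fingerprint of its input and output, so the chain can be replayed and checked. A minimal sketch, assuming in-memory lists of records; `run_step`, `dedupe`, and `normalize_sex` are hypothetical helpers, and a production pipeline would typically persist this log with code version identifiers.

```python
import hashlib
import json

def fingerprint(records):
    """SHA-256 of a list of dicts; keys are sorted so key order does not matter (row order does)."""
    payload = json.dumps(records, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()

lineage_log = []

def run_step(name, func, records, rationale):
    """Apply one transformation and record what went in, what came out, and why."""
    out = func(records)
    lineage_log.append({
        "step": name,
        "rationale": rationale,
        "input_hash": fingerprint(records),
        "output_hash": fingerprint(out),
        "rows_in": len(records),
        "rows_out": len(out),
    })
    return out

def dedupe(rows):
    """Keep the first record for each patient ID."""
    seen, out = set(), []
    for r in rows:
        if r["id"] not in seen:
            seen.add(r["id"])
            out.append(r)
    return out

def normalize_sex(rows):
    """Map sex codes to upper case without mutating the input rows."""
    return [{**r, "sex": r["sex"].upper()} for r in rows]

raw = [{"id": 1, "sex": "f"}, {"id": 2, "sex": "M"}, {"id": 2, "sex": "M"}]
step1 = run_step("dedupe", dedupe, raw, "Drop duplicate patient IDs")
step2 = run_step("normalize_sex", normalize_sex, step1, "Harmonize sex codes")
```

Because each step's input hash must equal the previous step's output hash, a gap or an undocumented manual edit shows up as a broken chain.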

4) Sites are increasingly pulled into RWE workflows

This is a pain point that is quietly growing. Sites are asked to support extraction, mapping, and confirmation of EHR-derived elements. If your site is not prepared, this becomes hidden labor that burns out coordinators, similar to the workload pressure described in the clinical research assistant salary outlook and the multi-study stress patterns seen in clinical trial assistant advancement paths. The fix is a clear workflow with roles, timelines, and escalation rules.

3: The Highest Impact RWE Use Cases Across the Trial Lifecycle in 2025

The most valuable trend is that RWE is being used at multiple points in the lifecycle, not just at the end. Sponsors are building “RWE touchpoints” that reduce risk, increase certainty, and strengthen the story around outcomes. This is similar to how a strong drug safety specialist career guide builds safety thinking across processes, not in isolated steps.

Use case 1: Design validation before first patient in

Teams use RWE to validate assumptions about baseline event rates, standard of care, and patient journey patterns. If your protocol assumes a visit schedule that does not match real care, you will get deviations, missed windows, and dropouts. That burden shows up as rework at the site level, which is why operational leaders lean on structures like the clinical research administrator pathway and training discipline in creating the perfect study environment. RWE helps you build a protocol that fits reality.
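Validating a baseline event rate usually means dividing observed events by person-time in a comparable RWE cohort and checking whether the rate the protocol assumed is consistent with it. A minimal sketch; the cohort numbers and the assumed protocol rate are invented, and the 95% interval uses a simple normal approximation on the log rate (one of several reasonable choices).

```python
import math

def event_rate_per_100py(events, person_years):
    """Crude incidence rate per 100 person-years with an approximate 95% CI."""
    if events == 0:
        raise ValueError("log-scale interval is undefined with zero events")
    rate = events / person_years
    se_log = 1 / math.sqrt(events)  # SE of log(rate) under a Poisson assumption
    lo = rate * math.exp(-1.96 * se_log)
    hi = rate * math.exp(1.96 * se_log)
    return rate * 100, lo * 100, hi * 100

# Hypothetical RWE cohort: 84 events over 2,100 person-years of follow-up.
rate, lo, hi = event_rate_per_100py(84, 2100)
protocol_assumed_rate = 6.0  # hypothetical rate used in the sample size calculation

if not (lo <= protocol_assumed_rate <= hi):
    print(f"Assumed rate {protocol_assumed_rate} is outside the RWE 95% CI ({lo:.1f} to {hi:.1f})")
```

An assumed rate above the real-world interval, as here, suggests the trial may accrue fewer events than planned, which is a sample size question to raise before first patient in.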

Use case 2: Enrollment targeting and screen fail reduction

RWE-powered feasibility can identify where eligible patients actually are, which providers see them, and which comorbidities trigger screen fails. This breaks the painful cycle of “open more sites” without fixing the eligibility mismatch. It also aligns with resource planning and compensation signals in the clinical research salary report and the staffing reality described in the top highest paying clinical research jobs. Fewer screen fails means less wasted coordinator time and faster timelines.
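A “screen fail simulation” can start as an attrition funnel: apply criteria in protocol order to an RWE cohort and record how many patients each one removes. A minimal sketch with hypothetical fields and criteria; because sequential attrition depends on criterion order, it also reports each criterion's fail rate against the full cohort.

```python
# Hypothetical RWE cohort and criteria, listed in protocol order.
cohort = [
    {"age": 66, "ckd": False, "recent_surgery": False},
    {"age": 81, "ckd": False, "recent_surgery": False},
    {"age": 58, "ckd": True,  "recent_surgery": False},
    {"age": 70, "ckd": True,  "recent_surgery": True},
    {"age": 62, "ckd": False, "recent_surgery": True},
]

criteria = [
    ("age <= 80", lambda p: p["age"] <= 80),
    ("no CKD", lambda p: not p["ckd"]),
    ("no surgery in last 90 days", lambda p: not p["recent_surgery"]),
]

def attrition_funnel(patients, rules):
    """Apply rules in order; return (rule, removed, remaining) for each step."""
    remaining = list(patients)
    rows = []
    for name, keep in rules:
        passed = [p for p in remaining if keep(p)]
        rows.append((name, len(remaining) - len(passed), len(passed)))
        remaining = passed
    return rows

def independent_fail_rates(patients, rules):
    """Share of the full cohort failing each rule on its own, independent of order."""
    n = len(patients)
    return {name: sum(not keep(p) for p in patients) / n for name, keep in rules}

funnel = attrition_funnel(cohort, criteria)
for name, removed, left in funnel:
    print(f"{name:<28} removed {removed:>3}  remaining {left:>3}")
```

The criterion with the largest independent fail rate is usually the first candidate for a protocol discussion or for targeted prescreening.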

Use case 3: External control arms and historical comparators

More 2025 protocols are exploring external controls using RWE, especially when randomization is difficult or unethical. This approach can shorten timelines, but it introduces serious bias risk. The teams that do it well treat it like a regulatory and quality program, which is why their planning resembles the discipline in regulatory affairs associate guides and clinical regulatory specialist pathways. If comparability is weak, the entire story collapses.
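A common first check of comparability between a single-arm trial cohort and an RWE external control is the standardized mean difference (SMD) for each prespecified covariate. A minimal sketch for continuous covariates using the pooled standard deviation; the data are invented, and the 0.1 cutoff is a widely used rule of thumb, not a regulatory requirement.

```python
import math
from statistics import mean, variance

def smd(treated, control):
    """Standardized mean difference for a continuous covariate (pooled sample SD)."""
    pooled_sd = math.sqrt((variance(treated) + variance(control)) / 2)
    return (mean(treated) - mean(control)) / pooled_sd

# Hypothetical baseline covariates: trial arm vs. RWE external control.
trial = {"age": [61, 64, 58, 70, 66], "baseline_score": [42, 45, 39, 50, 47]}
external = {"age": [68, 72, 65, 74, 70], "baseline_score": [41, 46, 40, 49, 46]}

imbalanced = {}
for cov in trial:
    d = smd(trial[cov], external[cov])
    if abs(d) > 0.1:  # rule-of-thumb imbalance threshold
        imbalanced[cov] = round(d, 2)
print(imbalanced)
```

Balance on measured covariates says nothing about unmeasured confounding, so an SMD table supports, but does not replace, the prespecified confounding plan.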

Use case 4: Safety signal detection and risk monitoring

RWE is used to detect rare adverse events and to contextualize safety findings. This overlaps with pharmacovigilance workflows and the skills described in the pharmacovigilance associate career roadmap and the leadership responsibilities in pharmacovigilance manager career steps. When a signal appears, you need a defensible method for defining the event, confirming capture, and escalating appropriately.
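One simple, prespecifiable signal threshold compares observed events with the number expected from an RWE background rate, and escalates when the Poisson probability of seeing at least that many events by chance falls below a chosen cutoff. A minimal sketch; the background rate, exposure, and 0.01 cutoff are hypothetical, and real pharmacovigilance programs often use more elaborate methods such as sequential testing or disproportionality analysis.

```python
import math

def poisson_upper_tail(k, lam):
    """P(X >= k) for X ~ Poisson(lam), computed as 1 - P(X <= k - 1)."""
    cdf = sum(math.exp(-lam) * lam**i / math.factorial(i) for i in range(k))
    return 1 - cdf

def signal_check(observed, person_years, background_rate_per_1000py, alpha=0.01):
    """Compare observed events with the RWE-expected count and flag escalation."""
    expected = background_rate_per_1000py * person_years / 1000
    p_value = poisson_upper_tail(observed, expected)
    return {
        "expected": expected,
        "o_e_ratio": observed / expected,
        "p_value": p_value,
        "escalate": p_value < alpha,
    }

# Hypothetical: 9 hepatic events in 1,500 person-years; RWE background of 2 per 1,000 py.
result = signal_check(observed=9, person_years=1500, background_rate_per_1000py=2.0)
```

Writing the cutoff and the event definition down before unblinding is what makes the escalation defensible rather than reactive.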

Use case 5: Endpoint validation and pragmatic outcome capture

Trials are increasingly judged on whether outcomes are meaningful and measurable in real practice. RWE helps validate whether endpoints are captured consistently and whether they represent actual patient benefit. This is tied to the way senior clinical roles build credibility in the clinical medical advisor career path and how sponsor facing roles like the medical science liaison roadmap translate evidence into decisions. If your endpoint cannot survive real world measurement, it becomes a debate, not an outcome.
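Checking whether an endpoint is “captured consistently” can begin with a per-site capture rate and a gap list. A minimal sketch, assuming a hypothetical extract with a boolean `endpoint_recorded` flag per patient and an illustrative 60% minimum capture rate.

```python
from collections import defaultdict

# Hypothetical extract: was the endpoint (e.g. a 6-month functional score) recorded?
records = [
    {"site": "A", "endpoint_recorded": True},
    {"site": "A", "endpoint_recorded": True},
    {"site": "A", "endpoint_recorded": False},
    {"site": "B", "endpoint_recorded": False},
    {"site": "B", "endpoint_recorded": False},
    {"site": "B", "endpoint_recorded": True},
    {"site": "B", "endpoint_recorded": True},
]

MIN_CAPTURE = 0.6  # hypothetical minimum acceptable capture rate

def capture_gap_list(rows, min_rate=MIN_CAPTURE):
    """Return per-site capture rates and the sites falling below the threshold."""
    totals, recorded = defaultdict(int), defaultdict(int)
    for r in rows:
        totals[r["site"]] += 1
        recorded[r["site"]] += r["endpoint_recorded"]  # True counts as 1
    rates = {s: recorded[s] / totals[s] for s in totals}
    gaps = sorted(s for s, rate in rates.items() if rate < min_rate)
    return rates, gaps

rates, gaps = capture_gap_list(records)
```

A site-level gap often reflects local documentation habits rather than patient outcomes, which is the distinction an endpoint capture audit needs to surface.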

4: The 2025 Operational Playbook for RWE Integration Without Breaking Trial Quality

The biggest lie teams tell themselves is “RWE will save time.” RWE saves time only when integration is designed to reduce rework. Otherwise, it adds complexity, creates more meetings, and produces debates about which dataset is correct. The playbook below is built around quality-first execution, using the same discipline you see in the QA specialist roadmap and the operational maturity described in the clinical research administrator pathway.

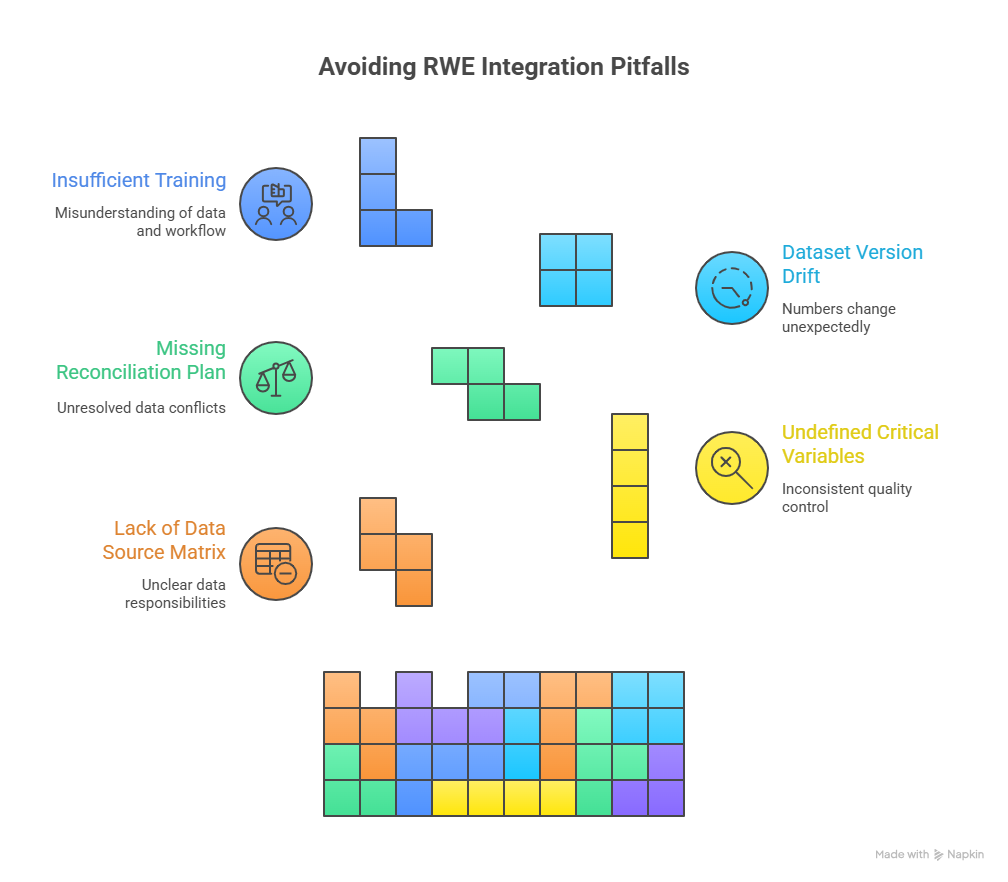

1) Start with a Data Source Matrix, not a vendor pitch

Every integration should begin with a matrix that lists each data element, the source of truth, the refresh frequency, and who owns QC. This mirrors how strong teams structure responsibilities in the top contract research vendors guide and avoid tool chaos described in the remote monitoring tools guide. Without a matrix, everyone assumes someone else is checking the data.
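A data source matrix can live in a spreadsheet, but keeping it as structured data makes gaps easy to check automatically, such as an element nobody owns for QC. A minimal sketch; the `MatrixRow` fields mirror the columns named above, and the example rows and role names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class MatrixRow:
    data_element: str
    source_of_truth: str        # e.g. "EDC", "EHR", "claims"
    refresh_frequency: str      # e.g. "daily", "monthly"
    qc_owner: Optional[str]     # named role accountable for QC

matrix = [
    MatrixRow("Randomization date", "EDC", "real time", "Clinical Data Manager"),
    MatrixRow("HbA1c", "Central lab feed", "weekly", "Lab Data Lead"),
    MatrixRow("Hospitalizations", "Claims", "monthly", None),
    MatrixRow("Concomitant meds", "EHR", "monthly", "  "),
]

def unowned_elements(rows):
    """List data elements with no accountable QC owner (missing or blank)."""
    return [r.data_element for r in rows if not (r.qc_owner and r.qc_owner.strip())]

print(unowned_elements(matrix))  # → ['Hospitalizations', 'Concomitant meds']
```

Running a check like this at each matrix revision turns “everyone assumes someone else is checking” into a visible, assignable gap.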

2) Define “critical variables” that get audit-level controls

Not everything needs the same control level. Define critical variables tied to primary endpoints, safety, and key eligibility. Apply stricter QC to those variables and lighter QC elsewhere. This is the same risk-based thinking that sponsors expect in compliance-heavy workflows described in the clinical regulatory specialist pathway and in structured planning found in regulatory affairs associate guides. You protect what matters most.
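Risk-based QC can be expressed as a sampling plan: full review for critical variables, a reproducible random sample for everything else. A minimal sketch; the variable names, the 10% sampling fraction, and the seed are illustrative assumptions.

```python
import random

CRITICAL = {"primary_endpoint", "sae_flag", "key_eligibility"}  # hypothetical critical variables
NONCRITICAL_SAMPLE_RATE = 0.10  # assumed sampling fraction for all other variables

def qc_plan(variables, record_ids, seed=2025):
    """Map each variable to the record IDs selected for source verification."""
    rng = random.Random(seed)  # fixed seed so the sample can be regenerated at audit
    plan = {}
    for var in variables:
        if var in CRITICAL:
            plan[var] = list(record_ids)  # 100% review for critical variables
        else:
            k = max(1, round(len(record_ids) * NONCRITICAL_SAMPLE_RATE))
            plan[var] = sorted(rng.sample(record_ids, k))
    return plan

ids = list(range(1, 51))
plan = qc_plan(["primary_endpoint", "smoking_status", "sae_flag"], ids)
```

Because one generator is shared across variables, the draw depends on both the seed and the variable order, so both belong in the QC plan documentation.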

3) Build a Reconciliation Plan before the first discrepancy happens

You will see conflicts between trial data and RWE. The question is whether you planned for it. Define how discrepancies are detected, triaged, and resolved, and who has decision authority. This is similar to how data-heavy teams prevent drift in clinical data management platform workflows and how study leaders reduce risk in the clinical research project manager salary guide. Without a reconciliation plan, you lose weeks to argument.
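The detect, triage, and route steps of a reconciliation plan can be sketched as a field-by-field comparison of patients present in both sources. A minimal sketch; the critical variable list and decision owners are hypothetical plan parameters, and patients present in only one source would need a separate check not shown here.

```python
from dataclasses import dataclass

@dataclass
class Discrepancy:
    patient_id: str
    variable: str
    trial_value: object
    rwe_value: object
    severity: str   # "critical" or "routine"
    owner: str      # who has decision authority under the plan

# Hypothetical plan parameters.
CRITICAL_VARS = {"event_date", "sae"}
DECISION_OWNER = {"critical": "Study Physician", "routine": "Data Manager"}

def reconcile(trial, rwe, variables):
    """Compare matched patients field by field and triage every mismatch."""
    issues = []
    for pid in sorted(trial.keys() & rwe.keys()):
        for var in variables:
            t, r = trial[pid].get(var), rwe[pid].get(var)
            if t != r:  # a missing RWE value also counts as a discrepancy here
                sev = "critical" if var in CRITICAL_VARS else "routine"
                issues.append(Discrepancy(pid, var, t, r, sev, DECISION_OWNER[sev]))
    return issues

trial = {"P01": {"event_date": "2025-03-02", "sae": True, "smoker": "no"},
         "P02": {"event_date": "2025-04-10", "sae": False, "smoker": "yes"}}
rwe = {"P01": {"event_date": "2025-03-04", "sae": True, "smoker": "no"},
       "P02": {"event_date": "2025-04-10", "sae": False, "smoker": None}}

issues = reconcile(trial, rwe, ["event_date", "sae", "smoker"])
```

Routing by severity at detection time is the point: the two-day event date shift goes to the person with decision authority, while the missing smoking status becomes a routine query.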

4) Freeze dataset versions like you freeze protocol versions

Version drift is a silent killer. If your dataset refreshes mid-analysis, your numbers change and trust collapses. Lock dataset versions and document refresh rules. This “version discipline” is aligned with the reliability standards expected in clinical data manager career roadmaps and the audit mindset seen in QA specialist pathways. No one wants a story that changes every two weeks.
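A lightweight way to freeze a dataset version is a manifest that pairs a version label with a SHA-256 fingerprint of each file, checked before every analysis run. A minimal sketch using only the standard library; the file name and version label are hypothetical, and the temporary directory just makes the example self-contained.

```python
import hashlib
import os
import tempfile

def sha256_file(path, chunk_size=65536):
    """Hash a file in chunks so large extracts do not need to fit in memory."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()

def lock_manifest(paths, version_label):
    """Record a version label plus a SHA-256 fingerprint for each dataset file."""
    return {"version": version_label,
            "files": {os.path.basename(p): sha256_file(p) for p in paths}}

def drifted_files(manifest, paths):
    """Return files whose current contents no longer match the locked fingerprint."""
    return [os.path.basename(p) for p in paths
            if manifest["files"].get(os.path.basename(p)) != sha256_file(p)]

# Usage: lock a hypothetical extract, then simulate an unplanned refresh.
with tempfile.TemporaryDirectory() as d:
    path = os.path.join(d, "claims_extract.csv")
    with open(path, "w") as f:
        f.write("id,event\n1,MI\n")
    manifest = lock_manifest([path], "claims-2025-Q1-v1")
    before_refresh = drifted_files(manifest, [path])
    with open(path, "a") as f:
        f.write("2,stroke\n")  # new rows arrive mid-analysis
    after_refresh = drifted_files(manifest, [path])

print(before_refresh, after_refresh)  # → [] ['claims_extract.csv']
```

A failed check should stop the run and trigger the documented refresh policy rather than silently analyzing the new rows.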

5) Train the humans, not just the system

RWE fails when operations teams do not understand what the data means and data teams do not understand the trial workflow. Train both sides on shared definitions and shared risk points. This is the same principle behind improving performance using proven test-taking strategies and building consistent routines in the perfect study environment guide. Shared language prevents expensive misunderstanding.

5: What 2025 RWE Integration Means for Roles, Metrics, and Career Growth

RWE is changing who gets hired, who gets promoted, and who becomes essential to sponsors. Teams need people who can connect clinical reality to data reality and keep it defendable. That is why you see RWE skills overlapping with growth paths in lead clinical data analyst roles, sponsor-facing roles like the medical science liaison roadmap, and safety roles like the pharmacovigilance specialist salary growth report. RWE is not replacing these roles. It is reshaping them.

The roles most affected by RWE integration

Clinical Operations and CRC teams: More requests to support EHR extraction, confirm variables, and explain missingness patterns. This connects to workload realities described in the clinical research assistant career roadmap and the advancement pressure in the clinical trial assistant career guide.

Data Management: RWE integration pushes data managers into external data pipelines, mapping, lineage, and reconciliation, matching the expanded scope described in the clinical data manager roadmap.

Regulatory and Quality: RWE plans increasingly require protocol alignment, documentation, and defensible methods, which fits the mindset of the regulatory affairs specialist roadmap and the QA specialist pathway.

Safety and PV: RWE is used for signal detection and contextualization, pushing PV teams to expand their datasets, aligning with the pharmacovigilance associate roadmap and leadership steps in pharmacovigilance manager pathways.

The metrics sponsors use to judge RWE success in 2025

If you want leadership to fund RWE, you need measurable ROI. The most commonly used metrics include:

Reduction in protocol amendments tied to feasibility errors

Reduction in screen fail rates due to better targeting

Faster enrollment due to improved site selection

Faster query closure through clear variable definitions

Fewer data disputes due to lineage documentation
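Before-and-after metrics are simple arithmetic, but writing the calculation down keeps the baseline and comparison periods explicit. A minimal sketch; the metric names and numbers are hypothetical, and a before/after comparison on its own does not prove that RWE caused the change.

```python
def relative_change(before, after):
    """Percent change from the baseline period; negative values are reductions."""
    if before == 0:
        raise ValueError("baseline must be non-zero")
    return (after - before) / before * 100

# Hypothetical metrics: comparable studies before vs. after RWE-informed planning.
metrics = {
    "amendments_per_study": (3.2, 2.0),
    "screen_fail_rate": (0.42, 0.30),
    "median_days_to_query_close": (18, 12),
}

for name, (before, after) in metrics.items():
    print(f"{name:<28} {before:>5} -> {after:>5}  ({relative_change(before, after):+.1f}%)")
```

Comparing against similar studies in the same period, rather than only against last year, makes the executive summary harder to dismiss.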

These metrics connect to operational performance and career leverage, similar to the way compensation and responsibility patterns appear in the clinical research salary report and the “responsibility premium” shown in the top highest paying clinical research roles. When you can quantify impact, you become hard to replace.

How to become “RWE fluent” without turning into a statistician

You do not need to run complex models to be valuable. You need to understand definitions, bias risks, and operational implications. A simple path:

Learn core RWE terms and common biases

Practice mapping variables from clinical reality to dataset fields

Build comfort with lineage and version control expectations

Learn how RWE changes feasibility, recruitment, and endpoint capture

This kind of structured learning fits well with the discipline taught in proven test-taking strategies and the routines described in creating the perfect study environment. It is about habits and clarity, not academic theory.

6: FAQs About Real World Evidence Integration in Clinical Trials in 2025

What is the biggest RWE integration trend in clinical trials in 2025?

The biggest trend is that RWE is planned earlier and used to make design decisions, not just support a narrative later. Sponsors are using RWE to refine criteria, validate endpoints, and improve feasibility. That shift increases pressure on governance, documentation, and data quality, which is why RWE now overlaps heavily with disciplines in the clinical regulatory specialist pathway and the QA specialist roadmap. Teams that treat RWE like a controlled program see fewer disputes and fewer delays.

How are sponsors using RWE to reduce protocol amendments?

They use RWE to stress-test the protocol against real clinical practice. That includes verifying patient availability, comorbidities, medication patterns, and whether endpoints are captured consistently. When those assumptions are validated early, fewer amendments are needed later. This is the same risk prevention logic used in strong operational programs like the clinical research administrator pathway and the systems discipline taught through structured resources in the clinical research blog library. Fewer amendments means less reconsenting, less retraining, and fewer timeline hits.

What are the biggest risks when integrating RWE into a trial?

The biggest risks are biased cohort selection, inconsistent variable definitions, missingness, linkage errors, and version drift. These risks do not always look dramatic at first, but they quietly destroy trust in results. That is why best practice approaches resemble the audit readiness mindset in the QA specialist roadmap and the defensible documentation expectations in the regulatory affairs specialist roadmap. If you cannot explain how the dataset became analysis ready, you invite delays and rework.

Can RWE replace randomized control arms?

RWE can support external controls in some situations, but it does not “replace” randomization as a default. The decision depends on disease context, ethical constraints, data availability, and comparability strength. When RWE is used for external controls, bias risk and confounding control become central, and those plans must be prespecified and defendable. The planning discipline here often mirrors compliance oriented thinking in the clinical regulatory specialist pathway and sponsor communication standards seen in the clinical medical advisor career path. The best teams treat it like a major program, not a shortcut.

How does RWE integration affect clinical research sites?

Sites are increasingly asked to support extraction, confirm data elements, and address discrepancies between trial source and RWE source. If the workflow is unclear, this becomes hidden labor that burns out coordinators and delays closeout. That pressure connects closely to how workload realities show up in the clinical research assistant salary outlook and advancement stress described in the clinical trial assistant career guide. The fix is to demand a clear data source matrix, timelines, and escalation rules before work starts.

What skills should clinical research professionals build to work with RWE?

Build skills in variable definition clarity, documentation discipline, discrepancy triage, and cross team communication. You should be able to translate what happens in a visit into how it should appear in a dataset, and you should understand the risk of missingness and inconsistent capture. Those skills align naturally with growth paths in clinical data coordinator careers and leadership oriented tracks like the clinical research administrator pathway. When you can bridge ops and data, you become essential.

How do strong teams keep RWE defensible under audit and regulatory review?

They maintain lineage, version control, quality scorecards, and clear documentation of transformations, definitions, and decision points. They also prespecify how RWE supports endpoints, comparators, or safety monitoring. This approach looks similar to controlled documentation programs described in the QA specialist roadmap and regulatory planning discipline in the regulatory affairs associate guide. The goal is not to claim the data is perfect. The goal is to prove the process is defensible.