The Rise of Wearable Tech: How Apple and Fitbit Will Power Future Clinical Trials

Apple Watch and Fitbit have crossed the line from lifestyle gadgets to trial-grade telemetry: high-frequency heart rate, HRV, sleep, mobility, arrhythmia flags, and task prompts that can compress timelines, cut site burden, and raise statistical power. The inflection isn’t marketing; it’s endpoint maturity, regulatory precedents, and workflow integrations that make risk-based monitoring practical. This playbook shows where wearables deliver decision-quality data, how to validate them rigorously, and how to design hybrid/virtual studies that actually recruit and retain. To widen your site options, map capacity growth in Africa, India, and APAC, and shortlist partners from the global CRO directory.

1) Why Apple & Fitbit are now clinically useful—beyond “nice to have”

Three converging shifts made Apple/Fitbit endpoints decision-grade: continuous passive capture, established validation routes, and workflow integration into eCOA/RBM stacks. Continuous HR/HRV/motion/sleep streams yield many measurements per participant; averaging them shrinks the day-to-day noise that a single monthly clinic vital cannot escape, which can support fewer visits or a smaller N without losing power. Developer APIs and vendor technical documentation now make it practical to pre-specify confirmatory analyses and run calibration sub-studies. Finally, app ecosystems hook into eConsent/ePRO, allowing just-in-time prompts and metadata reconciliation during monitoring; keep the team's vocabulary consistent with the CRA monitoring terms and the acronyms reference.

Operationally, wearables pair well with distributed site networks. If you’re expanding outside the US, compare incentive structures and IRB norms across countries winning the trial race. For partner selection, pull candidates from the global CRO directory and benchmark sponsor sophistication with US sponsor insights. These linkages reduce onboarding friction, which is the silent killer of first-in-class remote protocols.

| Clinical Measure | Device Modality | Trial Use Case | Validation/Regulatory Risk | Data Cadence | Red Flags |
|---|---|---|---|---|---|
| Resting heart rate | PPG | Cardio-metabolic risk trend; baseline stability | Low | Daily avg | Acute illness confounds |
| Heart rate variability (RMSSD) | PPG | Stress/load recovery, sleep quality markers | Moderate | Nightly windows | Motion artifacts; sampling windows differ |
| Atrial fibrillation (irregular rhythm) | PPG + single-lead ECG (Apple) | Screening/monitoring in post-market studies | Low–Moderate | Event-driven | False positives without confirmatory ECG |
| Step count / activity minutes | IMU (accel/gyro) | Mobility endpoints in metabolic & oncology | Low | Per minute | Device wear adherence |
| Gait speed / cadence | IMU | Frailty, neurodegenerative disease function | Moderate | Bout-based | Terrain variability; pocket vs wrist |
| Six-minute walk proxy | IMU + GPS | Cardio-pulmonary functional capacity | Moderate | Task events | Outdoor vs indoor comparability |
| SpO₂ (spot) | PPG (red/IR) | Nocturnal hypoxia signals; respiratory trials | High | Event/spot | Skin tone, perfusion sensitivity |
| Sleep stages (light/deep/REM) | PPG + IMU | Sleep interventions; mental health correlates | Moderate | Nightly | Stage classification variance vs PSG |
| Total sleep time / efficiency | PPG + IMU | Insomnia endpoints, shift-work studies | Low–Moderate | Nightly | Nap detection; watch off-wrist |
| Irregular breathing rate | PPG | Respiratory infection recovery monitoring | Moderate | Nightly | Algorithm drift; body position bias |
| Body temperature proxy | Skin temp sensor (select models) | Cycle tracking; infection onset signals | High | Nightly | Ambient influence; sensor location |
| Arrhythmia burden (episodes/day) | PPG + confirm ECG | Antiarrhythmic therapy monitoring | Moderate | Event-driven | Participant confirmation compliance |
| Fall detection | IMU | Safety signal in geriatric/oncology trials | Low | Event-driven | False alarms during sports |
| Sedentary ratio | IMU | Behavioral endpoints in metabolic disease | Low | Hourly | Desk-job confounding |
| VO₂max estimate | PPG + IMU models | Cardio-fitness responder analysis | Moderate | Weekly | Requires sustained outdoor bouts |
| Stress score (composite) | HRV-based model | Psych-cardio studies; burnout programs | High | Daily | Model transparency; placebo sensitivity |
| Menstrual cycle phase | Temp + symptom inputs | Women’s health stratification | Moderate | Daily | Manual input adherence |
| Energy expenditure | IMU + HR models | Weight-loss interventions | High | Daily | Population calibration mismatch |
| Hand tremor amplitude | IMU (high-rate) | Parkinson’s motor symptom tracking | Moderate | Task events | Sampling rate limits on some models |
| Medication adherence proxy | Routine + HR changes | Signal anomalies post-dose windows | High | Daily | Ethical/privacy considerations |
| Skin perfusion trends | PPG amplitude | Peripheral vascular disease studies | High | Per minute | Temperature & fit artifacts |
| Respiratory rate (sleep) | PPG | Post-viral recovery endpoints | Moderate | Nightly | Motion sensitivity |
| Oxygen desaturation index | PPG (select models) | OSA screening in feasibility phases | High | Nightly | Needs clinical confirmation |
| Biobehavioral rhythms | Composite (HR/HRV/activity) | Mood disorder relapse prediction | High | Hourly | Model validation |
| Passive ePRO triggers | Anomaly detection | Prompt symptom surveys at the right time | Low–Moderate | Event-driven | Alert fatigue |
| Geofenced mobility | GPS | Exposure/routine mapping in COPD | High | Bouts | Privacy & IRB constraints |

2) From hypothesis to analysis plan: a rigorous validation blueprint

Use the readiness matrix above to select endpoints, then lock a three-layer validation plan: device-level, algorithm-level, and context-of-use.

Device-level. Confirm sampling rates, dynamic range, timestamps, and on-wrist detection. For AF screening, pair Apple’s PPG flags with single-lead ECG confirmations in a run-in to establish positive predictive value (PPV) before primary enrollment. For sleep, restrict claims to total sleep time and efficiency unless you have PSG sub-studies. Your methods section should cite a calibration cohort and a Bland–Altman-style agreement analysis. Standardize reviewer language with PI terminology so site PIs sign off confidently.
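
The two agreement statistics named in the device-level layer can be computed with the Python standard library alone. The sketch below assumes paired device and reference readings for the same participant-nights (for example, wrist-derived total sleep time against PSG, in minutes) and run-in counts of wearable AF flags that ECG did or did not confirm. The function names and example numbers are hypothetical placeholders, and the 1.96 multiplier assumes the paired differences are roughly normal.

```python
from statistics import mean, stdev


def bland_altman(device, reference):
    """Return (bias, lower LoA, upper LoA) for paired device vs reference readings."""
    if len(device) != len(reference) or len(device) < 2:
        raise ValueError("need at least two paired readings of equal length")
    diffs = [d - r for d, r in zip(device, reference)]
    bias = mean(diffs)   # mean device-minus-reference difference
    sd = stdev(diffs)    # sample SD of the paired differences
    return bias, bias - 1.96 * sd, bias + 1.96 * sd


def positive_predictive_value(confirmed, unconfirmed):
    """Share of wearable AF flags that the run-in ECG confirmed."""
    flagged = confirmed + unconfirmed
    if flagged == 0:
        raise ValueError("no flags to evaluate")
    return confirmed / flagged


# Hypothetical run-in numbers for illustration only (minutes of sleep; flag counts).
bias, lower, upper = bland_altman([412, 398, 455, 430], [420, 401, 440, 425])
ppv = positive_predictive_value(confirmed=18, unconfirmed=6)
print(f"bias={bias:.2f} min, LoA=({lower:.1f}, {upper:.1f}), PPV={ppv:.2f}")
```

Keeping the two checks as separate functions lets the SAP report each one against its own pre-specified acceptance threshold.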

Algorithm-level. Pre-register thresholds (e.g., HRV RMSSD windows), smoothing rules, and motion artifact exclusion. Add model drift checks at interim analyses. When mapping composite metrics (e.g., “stress score”), treat them as exploratory unless you possess strong prior validation. Train the CRA team using monitoring terms and align with sponsor data science leads you’ll likely find via the sponsors directory.
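
To illustrate what a pre-registered RMSSD window with motion-artifact exclusion looks like in practice, here is a minimal sketch. It assumes access to beat-to-beat RR intervals paired with an accelerometer magnitude per beat, which not every consumer export provides. The thresholds (0.1 g motion, 300 to 2000 ms plausible RR, 30 minimum pairs) and the `rmssd` name are hypothetical placeholders to be fixed in your SAP, not validated values.

```python
from math import sqrt


def rmssd(beats, motion_threshold_g=0.1, rr_min_ms=300, rr_max_ms=2000, min_pairs=30):
    """RMSSD in ms for one pre-registered window, excluding artifact beats.

    beats: time-ordered (rr_interval_ms, motion_magnitude_g) tuples.
    Defaults are hypothetical placeholders, not validated thresholds.
    Returns None when fewer than min_pairs clean successive differences remain.
    """
    # A beat is clean if its RR interval is physiologically plausible and the
    # wrist was still enough for PPG beat timing to be trusted.
    clean = [
        rr_min_ms <= rr <= rr_max_ms and motion <= motion_threshold_g
        for rr, motion in beats
    ]
    # Only difference beats that are adjacent in the original series and both
    # clean, so an excluded beat never gets bridged into a fake difference.
    diffs_sq = [
        (beats[i + 1][0] - beats[i][0]) ** 2
        for i in range(len(beats) - 1)
        if clean[i] and clean[i + 1]
    ]
    if len(diffs_sq) < min_pairs:
        return None
    return sqrt(sum(diffs_sq) / len(diffs_sq))
```

Returning `None` for sparse windows, rather than a number computed from a handful of beats, keeps the "insufficient data" decision explicit, which is what a pre-registered exclusion rule needs.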

Context-of-use. Specify whether participants wear devices 24/7 or only during task prompts (e.g., 6-minute walk proxies). If the target population is in India or Sub-Saharan Africa, check BYOD feasibility against local LTE and Bluetooth reliability, and use the regional feasibility signals from Africa growth and India’s boom.

3) Trial design patterns that make wearables outperform clinics

Hybrid Decentralized RCT. Use Apple/Fitbit for passive endpoints plus app-delivered ePROs, and cluster in-person visits for biosamples and ECGs only. Recruit at scale using APAC capacity from site directories, balanced with European sites for quality, while watching macro shocks such as Brexit risk. Power calculations can credit the variance reduction from averaging high-frequency measures, so your statistician may be able to justify a lower N than a clinic-only design; the size of that gain depends on how strongly each participant's repeated measurements correlate with one another.
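
The variance-reduction argument can be made concrete with the textbook two-arm sample-size formula for a difference in means, replacing the single-reading variance σ² with the variance of a participant's average over k repeated measures, σ²(1 + (k − 1)ρ)/k. This sketch assumes equal correlation ρ between any two of a participant's measurements (compound symmetry), a two-sided test, and the per-participant mean as the analysis endpoint; `n_per_arm` is a hypothetical helper, not a substitute for your statistician's model.

```python
from math import ceil
from statistics import NormalDist


def n_per_arm(delta, sd, k=1, rho=0.0, alpha=0.05, power=0.80):
    """Participants per arm to detect a mean difference `delta` (two-sided test).

    sd:  SD of a single measurement.
    k:   repeated measurements averaged per participant.
    rho: assumed correlation between any two of a participant's measurements.
    """
    z = NormalDist().inv_cdf
    # Variance of a participant's mean of k equicorrelated measurements,
    # relative to the variance of a single measurement.
    variance_factor = (1 + (k - 1) * rho) / k
    n = 2 * (z(1 - alpha / 2) + z(power)) ** 2 * sd ** 2 * variance_factor / delta ** 2
    return ceil(n)


# Hypothetical inputs: a single clinic reading vs a 14-night average.
print(n_per_arm(delta=5, sd=10))
print(n_per_arm(delta=5, sd=10, k=14, rho=0.5))
```

With ρ = 1 the repeated measures add no information and N is unchanged, so the gain rests entirely on estimating ρ from pilot or run-in data rather than assuming it.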

Enrichment via digital phenotypes. Run a 14-day pre-randomization telemetry run-in to identify likely responders and non-responders from HRV, activity, or sleep-efficiency trends. Enrichment can boost effect sizes, but it narrows the population your results generalize to, so pre-specify and report the enrichment criteria. Calibrate thresholds against published VO₂max estimates and watch for confounding (temperature, illness). Align CRA checklists with monitoring terms and build sponsor alignment using US sponsor insights.

Adaptive triggers. Use continuous endpoints to drive sample-size re-estimation or arm-dropping when conditional power dips. Pre-specify interim cutoffs and guard against operational bias with blinded statistician workflows. When running across borders, maintain redundancy in CRO staffing using the world CRO list and distribute sites according to country competitiveness.
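
Conditional power under the current trend has a closed form when the test statistic is approximated as Brownian motion in information time: with interim statistic Z_t at information fraction t, CP = 1 − Φ((z_{1−α} − Z_t/√t)/√(1 − t)). The sketch assumes a one-sided test at level α and ignores any alpha-spending adjustment to the final critical value, which a group-sequential design would need to add; `conditional_power` is a hypothetical name.

```python
from math import sqrt
from statistics import NormalDist


def conditional_power(z_interim, info_frac, alpha=0.025):
    """Conditional power under the current trend for a one-sided level-alpha test.

    z_interim: interim Z statistic; info_frac: information fraction t, 0 < t < 1.
    Uses the Brownian-motion approximation and the fixed-sample critical value
    (no alpha-spending adjustment).
    """
    if not 0 < info_frac < 1:
        raise ValueError("info_frac must be strictly between 0 and 1")
    nd = NormalDist()
    z_crit = nd.inv_cdf(1 - alpha)
    # Projecting the interim drift to t = 1 gives an expected final statistic
    # of z_interim / sqrt(t); the remaining increment has variance 1 - t.
    return 1 - nd.cdf((z_crit - z_interim / sqrt(info_frac)) / sqrt(1 - info_frac))


# Hypothetical interim look at half the planned information.
cp = conditional_power(z_interim=1.1, info_frac=0.5)
print(f"conditional power = {cp:.2f}")
```

A pre-specified futility rule would then compare `cp` against a threshold written into the protocol and reviewed by the DMC; the threshold is a design decision, not something this function supplies.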

Safety overlays. Treat fall detection, irregular rhythm alerts, and respiratory-rate changes as signals to act on, not as adjudicated SAEs unless the protocol defines them that way. Build workflows with central medical monitors trained using the medical monitor/MSL questions, and define escalation SLAs in vendor contracts.

What’s your biggest blocker to adding Apple/Fitbit endpoints?

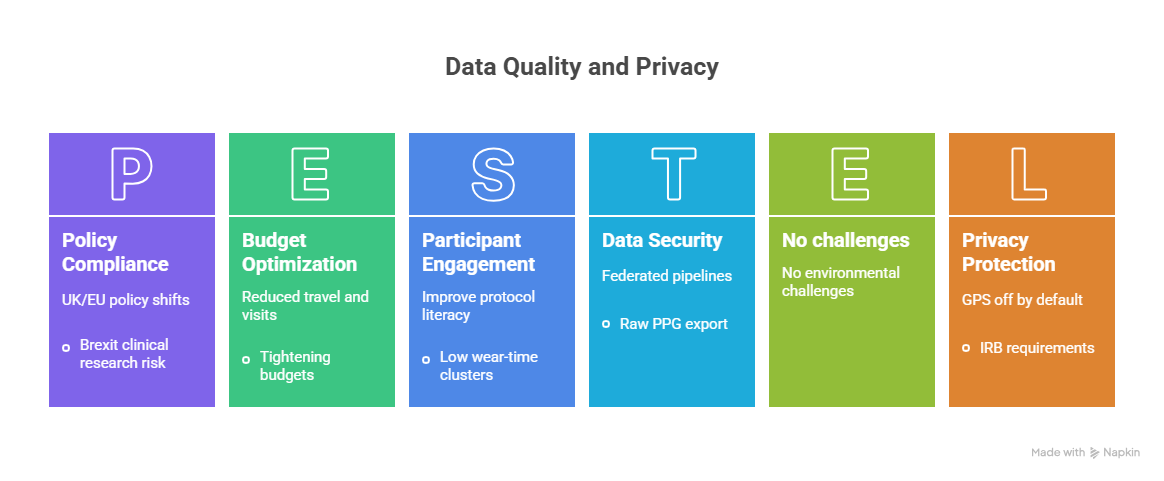

4) Data quality, privacy, and monitoring—how to avoid the traps that sink studies

Artifact control. Mandate HRV from sleep windows rather than noisy daytime readings; require on-wrist detection and minimum wear-time thresholds. Use change-point detection to exclude post-fever days. Document these rules in your SAP and align CRA checklists using monitoring terminology. For oversight capacity, plan staffing with the world CRO directory.
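
A minimal sketch of the two artifact rules: a per-day wear-time check, and a one-sided CUSUM on nightly skin temperature that masks a flagged day plus a washout period. CUSUM is one simple change-point method chosen here for illustration, not the only option. The 600-minute wear threshold, the CUSUM slack `k` and decision threshold `h`, the 3-day washout, and all function names are hypothetical, and skin temperature is only available on select models.

```python
def valid_days(on_wrist_minutes, min_minutes=600):
    """Mark each day valid if on-wrist time meets the (hypothetical) threshold."""
    return [m >= min_minutes for m in on_wrist_minutes]


def cusum_alarms(values, baseline, k=0.2, h=1.0):
    """One-sided upper CUSUM: indices where cumulative upward drift exceeds h.

    values: nightly skin-temperature readings; baseline: the participant's
    run-in mean; k (slack) and h (decision threshold) share the values' units.
    """
    s, alarms = 0.0, []
    for i, x in enumerate(values):
        s = max(0.0, s + (x - baseline - k))
        if s > h:
            alarms.append(i)
            s = 0.0  # restart accumulation after each alarm
    return alarms


def exclusion_mask(values, baseline, washout_days=3, **cusum_kwargs):
    """True for each alarm day and the washout days that follow it."""
    exclude = [False] * len(values)
    for i in cusum_alarms(values, baseline, **cusum_kwargs):
        for j in range(i, min(i + washout_days + 1, len(values))):
            exclude[j] = True
    return exclude


# Hypothetical nightly skin-temperature deviations (deg C) from a 0.0 baseline.
nights = [0.0, 0.1, -0.1, 0.9, 1.0, 0.2, 0.0, 0.0]
print(exclusion_mask(nights, baseline=0.0))
```

CUSUM accumulates evidence before alarming, so the flag can land a night after the rise begins (night 4 rather than night 3 in the example); if that lag matters, the SAP can extend the mask backward by a day.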

Missingness strategy. Pre-define how data missing at random (MAR) and missing not at random (MNAR) will be handled, and use pattern-mixture models when missingness clusters around events such as travel or illness. Trigger in-app nudges when wear-time dips below threshold. Train participants with micro-modules adapted from test-taking strategy content to improve protocol literacy, then reinforce them with study environment tips repurposed as short participant learning bites.
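
One common pattern-mixture sensitivity analysis is delta adjustment: impute missing values under MAR, shift the imputed values in one arm by δ, and find the δ at which the conclusion flips (the tipping point). The sketch below uses single mean imputation as a crude stand-in for the multiple imputation a real SAP would specify, assumes higher endpoint values are better (so a negative δ means dropouts fared worse than MAR predicts), and uses hypothetical function names.

```python
from statistics import mean


def delta_adjusted_effect(treated, control, delta):
    """Treated-minus-control mean after delta-adjusted imputation in the treated arm.

    Missing values (None) are filled with the arm's observed mean, a crude MAR
    stand-in for multiple imputation; imputed treated values are then shifted
    by delta to represent dropouts doing worse (delta < 0) than MAR predicts.
    """
    def impute(arm, shift):
        observed_mean = mean(v for v in arm if v is not None)
        return [v if v is not None else observed_mean + shift for v in arm]

    return mean(impute(treated, delta)) - mean(impute(control, 0.0))


def tipping_point(treated, control, deltas):
    """First delta, in the order given, at which the estimated benefit is gone."""
    for d in deltas:
        if delta_adjusted_effect(treated, control, d) <= 0:
            return d
    return None  # conclusion holds across the whole grid


# Hypothetical endpoint values; None marks a participant with missing data.
treated = [10, 12, None, None]
control = [8, 8, 8, 8]
print(tipping_point(treated, control, deltas=[0, -2, -4, -6, -8]))
```

Reporting the tipping point lets reviewers judge whether an MNAR shift that large is clinically plausible, which is usually more persuasive than a single "primary" imputation.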

Privacy & IRB. Keep GPS off by default unless justified in the risk–benefit statement. Minimize raw PPG export; store derived features when possible. Use federated or pseudonymized pipelines and restrict dashboards to role-based access. If running in the UK/EU, factor in the policy shifts analyzed in Brexit clinical research risk and hedge with APAC capacity from site directories.
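
For a pseudonymized pipeline, a keyed hash (HMAC-SHA256 from the Python standard library) gives each participant a stable pseudonym that cannot be recomputed without the secret key, and the export keeps only whitelisted derived features. The field names and helper names below are hypothetical, and the sketch assumes the key is held by a party separate from the analytics team (for example, in a managed key store).

```python
import hashlib
import hmac


def pseudonymize(participant_id: str, secret_key: bytes) -> str:
    """Stable HMAC-SHA256 pseudonym; cannot be recomputed without secret_key."""
    return hmac.new(secret_key, participant_id.encode("utf-8"), hashlib.sha256).hexdigest()


# Hypothetical whitelist of derived features allowed to leave the device pipeline.
DERIVED_FIELDS = ("date", "hrv_rmssd_ms", "total_sleep_min", "on_wrist_pct")


def to_analysis_record(record: dict, secret_key: bytes) -> dict:
    """Keep whitelisted derived features and swap the direct ID for a pseudonym.

    Anything not whitelisted (raw PPG, GPS traces, names) is dropped.
    """
    out = {k: record[k] for k in DERIVED_FIELDS if k in record}
    out["pid"] = pseudonymize(record["participant_id"], secret_key)
    return out
```

A whitelist rather than a blacklist means a new raw field added by a vendor update is excluded by default instead of leaking into dashboards.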

Risk-based monitoring. Build signal-driven SDV: focus on protocol deviations, low wear-time clusters, and outlier drift. Define data quality KPIs (median nightly HRV variance, weekly missingness, on-wrist percentage) and tie them to a central-monitor escalation tree staffed by medical monitors trained via the MSL certification guide. When budgets tighten, show ROI using reduced travel and visit count benchmarks from country race analysis.
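
The KPI set can be computed per site and routed to the escalation tree with a few lines. This sketch interprets "median nightly HRV variance" as the median, across a site's participants, of each participant's variance in nightly HRV over the week; that interpretation, the field names, and the flag thresholds are assumptions for illustration only.

```python
from statistics import median, pvariance


def site_kpis(participants):
    """Weekly data-quality KPIs for one site.

    participants: dicts with 'nightly_hrv' (7 values, None = missing night)
    and 'on_wrist_pct' (0-100). Field names are hypothetical.
    """
    hrv_variances, missingness, on_wrist = [], [], []
    for p in participants:
        nights = p["nightly_hrv"]
        observed = [v for v in nights if v is not None]
        missingness.append(1 - len(observed) / len(nights))
        on_wrist.append(p["on_wrist_pct"])
        if len(observed) >= 2:
            hrv_variances.append(pvariance(observed))
    return {
        "median_hrv_variance": median(hrv_variances) if hrv_variances else None,
        "weekly_missingness": sum(missingness) / len(missingness),
        "median_on_wrist_pct": median(on_wrist),
    }


def escalation_flags(kpis, max_missing=0.20, min_on_wrist=80.0, max_hrv_variance=400.0):
    """Names of KPIs that breach (hypothetical) thresholds and go to the central monitor."""
    flags = []
    if kpis["weekly_missingness"] > max_missing:
        flags.append("missingness")
    if kpis["median_on_wrist_pct"] < min_on_wrist:
        flags.append("on_wrist")
    variance = kpis["median_hrv_variance"]
    if variance is None or variance > max_hrv_variance:
        flags.append("hrv_variance")
    return flags
```

Treating a site with no usable HRV data as a breach, rather than skipping it, keeps silent data loss from looking like good data quality.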

5) Implementation blueprint—BYOD, equity, budgets, and KPIs that sponsors respect

BYOD vs provisioned. BYOD accelerates enrollment where Apple/Fitbit penetration is high; provision devices where equity matters or networks are unreliable. In India and Africa, pair provisioned devices with stipends and mobile data support, guided by the growth signals in India’s boom and Africa opportunity. Partner with regional CROs from the global directory that already hold device SOPs.

Recruitment & retention. Advertise less travel and more flexibility, backed by telemetry-verified adherence bonuses. Use micro-goals (7-day streaks) and in-app motivational prompts that unlock small incentives. Pre-screen with ECG eligibility tasks for AF-adjacent studies and consolidate biosample collection into fewer visit days. Build caregiver modes for pediatric/geriatric arms. When recruiting clinicians and coordinators, cite compensation from the salary report and compare role-specific pay such as CRC salaries and CRA salaries to set realistic budgets.
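
Streak-based incentives are easiest to audit when adherence comes from telemetry rather than self-report. A minimal sketch, assuming each day has already been marked adherent or not (here from a hypothetical 600 on-wrist-minute threshold) and that each completed, non-overlapping 7-day run unlocks one incentive; both rules and the helper names are hypothetical.

```python
def current_streak(adherent_days):
    """Consecutive adherent days counting back from the most recent day."""
    streak = 0
    for ok in reversed(adherent_days):
        if not ok:
            break
        streak += 1
    return streak


def incentives_unlocked(adherent_days, streak_len=7):
    """Count completed, non-overlapping runs of streak_len adherent days."""
    count = run = 0
    for ok in adherent_days:
        run = run + 1 if ok else 0
        if run == streak_len:
            count += 1
            run = 0  # start the next streak from zero
    return count


# Hypothetical: a day counts as adherent with >= 600 on-wrist minutes.
minutes = [720, 680, 650, 900, 610, 700, 640, 200, 700, 700]
adherent = [m >= 600 for m in minutes]
print(current_streak(adherent), incentives_unlocked(adherent))
```

Showing participants the current streak while paying only on completed runs keeps the in-app prompt motivating without making the incentive schedule ambiguous.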

Participant training. Deliver 90-second videos on charging, strap fit, and sleep mode. Add checklist quizzes using cues adapted from exam anxiety guidance and test-taking strategies. For respiratory or infectious indications, include illness-flagging prompts; for women’s health arms, be explicit about the limits of cycle tracking and ask participants to confirm with clinical tests.

Equity by design. Budget for multi-size straps, skin-tone-aware PPG calibration plans, and language-localized apps. Run a usability pilot in the lowest-connectivity stratum. Use local CROs from the world directory and place sites where regulatory support and ethics turnaround are strong, per country race insights.

Budgeting & vendor map. Expect incremental per-participant costs in: devices, replacements, data plans, integrations, and analytics. Offset by fewer in-person visits, shorter recruitment, and lower CRA travel. Use board-friendly deltas framed against historical N and visit schedules. For scale, combine centralized analytics with regional CRO execution as cataloged in the global CRO list.
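
The board-friendly delta is simple arithmetic once the cost lines are itemized. The sketch nets per-participant wearable costs against avoided visits and CRA travel days. Every number in the usage lines is a made-up placeholder rather than a benchmark, the class and function names are hypothetical, and shorter recruitment is left out because its value depends on your own timeline model.

```python
from dataclasses import dataclass


@dataclass
class WearableCosts:
    """Incremental per-participant cost lines (currency units are yours to choose)."""
    device: float
    replacement_reserve: float
    data_plan: float
    integration_share: float
    analytics_share: float

    def per_participant(self) -> float:
        return (self.device + self.replacement_reserve + self.data_plan
                + self.integration_share + self.analytics_share)


def net_cost_delta(n, costs, visits_avoided, cost_per_visit,
                   cra_days_avoided, cost_per_cra_day):
    """Study-level cost change vs a clinic-only design (negative = net savings).

    visits_avoided is per participant; cra_days_avoided is study-wide.
    """
    added = n * costs.per_participant()
    saved = n * visits_avoided * cost_per_visit + cra_days_avoided * cost_per_cra_day
    return added - saved


# Placeholder figures only; substitute your own quotes and historical schedules.
delta = net_cost_delta(
    n=100,
    costs=WearableCosts(300, 50, 100, 40, 60),
    visits_avoided=4, cost_per_visit=250,
    cra_days_avoided=20, cost_per_cra_day=1000,
)
print(delta)
```

Framing the result as a single signed delta against the historical visit schedule matches how the paragraph suggests presenting it to a board.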

Team upskilling. Speed onboarding with short certifications. For data-facing roles, steer staff to the MSL study guide and PI terms. For pharmacovigilance overlays in device-drug combinations, read the PV salaries & growth report to understand talent markets before bidding.

6) FAQs — practical answers teams ask before green-lighting wearable endpoints


For primary endpoints, stick to resting heart rate, activity minutes/steps, and total sleep time/efficiency; use irregular rhythm notifications as safety screens rather than primaries. Treat HRV, VO₂max estimates, and sleep staging as key secondary or exploratory endpoints unless you run device-specific calibration sub-studies. To see who can execute these designs at scale, shortlist candidates from the global CRO directory and align the site mix with APAC site capacity.


Hybrid. Use BYOD to accelerate recruitment where penetration is high; provision for underserved cohorts and network-limited regions. Budget for replacement devices, power banks, and data stipends. When opening sites in growth regions, pressure-test assumptions against India’s market and Africa opportunity, then lock execution with regional partners from the world CRO list.


Lead with context-of-use: define wear-time thresholds, artifact rules, and confirmatory measures. Provide agreement analyses against reference tools and a risk log covering false alarms (AF), privacy (GPS), and SpO₂ skin-tone bias. Use run-ins to tune thresholds before randomization. Align the narrative with sponsor expectations drawn from US sponsor insights and standardize reviewer language via PI terms.


Show: screen-to-randomize time, visit reductions per participant, adherence (on-wrist %), data completeness, CRA travel days avoided, and conditional power at interim. Compare against historical clinic-only baselines. When staffing plans cause hesitation, share compensation benchmarks from the salary report and role specifics like CRC salaries and CRA salaries.


Yes—passive adherence via on-wrist checks and adaptive prompts catches deviations early. Use behavioral economics: streak badges, nudge timing, and small, immediate rewards. For complex regimens, tie prompts to contextual signals (e.g., HR rise after dosing). Build central oversight with medical monitors prepared via the MSL guide and escalation rules aligned to monitoring terms.


Common failure points: loose straps, charging gaps, model updates mid-study, unclear artifact logic, and over-claiming exploratory metrics. Prevent with device SOPs, firmware freezing, training micro-modules, and calibration cohorts. If geopolitical risk reshapes your network, rebalance with countries highlighted in the clinical-trial race analysis and hedge EU/UK exposure using insights from Brexit impacts.


Add a digital endpoints lead, data quality engineer, and participant engagement PM. Upskill CRAs and PIs using PI terminology and the acronyms guide. For device-drug safety overlays, reference PV talent trends in the PV report to scope costs.