Data Monitoring Committee (DMC): Roles in Clinical Trials Explained

A Data Monitoring Committee (DMC) exists because trials don’t fail only from bad science; they also fail from unchecked risk, biased decisions, and late discovery of safety or integrity problems. In modern clinical research, sponsors need a mechanism that can look at accumulating data without pressure to “make the trial work.” That’s what a DMC does: it provides independent oversight that protects participants, protects credibility, and keeps a trial from drifting into preventable disaster. If you understand DMC roles, you’ll read protocols, interim plans, and safety narratives with a sharper eye, which makes you more valuable on any study team.

1. What a DMC Is and Why Trials Use One

A DMC is an independent group that reviews accumulating trial data—typically unblinded or partially unblinded—to recommend whether a study should continue as planned, be modified, pause, or stop. The key word is independent. A DMC is not a sponsor cheerleader and not a site problem-solver. It’s an oversight body whose value comes from being able to say “this isn’t safe,” “this isn’t credible,” or “this isn’t worth continuing,” even when money and timelines are screaming the opposite.

You’ll see DMCs in trials where participant risk is meaningful, endpoints are hard (mortality, irreversible outcomes, serious toxicity), or interim decisions could change real lives. If you’re building a career in safety-heavy tracks like pharmacovigilance or advancing through a drug safety specialist role, DMC logic is foundational because it shapes how safety signals are interpreted under uncertainty.

A DMC helps solve three painful, common trial problems:

First, ethical drift. When recruitment is slow and budgets are tight, teams unconsciously relax judgment: delayed AE follow-up, “we’ll fix it later” documentation, borderline eligibility. A DMC doesn’t manage sites, but its questions force discipline. That discipline reinforces the accountability expected of principal investigators and keeps oversight clean for sub-investigators.

Second, data credibility collapse. Missing data, protocol deviations, site outliers, and endpoint drift can quietly destroy interpretability. DMC review pressures the operational and data teams to keep integrity high, which is why DMC conversations often intersect with clinical data management and clinical data coordinator workflows.

Third, biased stopping decisions. Without an independent body, interim looks can turn into self-fulfilling narratives: overreacting to noise, continuing too long out of sunk cost, or stopping early for a mirage effect. DMCs anchor those decisions to predefined rules, rigorous statistics, and participant protection. This mindset is valuable in leadership paths like clinical research project management and compliance-driven roles like quality assurance.

2. DMC Composition and Independence: Who Sits on It and Why That Matters

A DMC’s power comes from two things: expertise and independence. If either is compromised, the committee becomes a formality that adds cost without adding protection.

A typical DMC includes clinicians with disease-area expertise, a biostatistician who understands interim monitoring, and often specialists relevant to the risk profile (cardiology, infectious disease, oncology, pediatrics). The committee may also include an ethicist or patient-safety-focused expert when participant vulnerability is high. The reason this matters is simple: the DMC isn’t just looking for “bad numbers.” It’s interpreting clinical meaning under messy reality, which is why DMC members must be able to distinguish true harm signals from expected background events.

Independence is non-negotiable. DMC members should not have financial relationships that could bias judgment, and they should not be operationally entangled with the sponsor’s study team. If a DMC is perceived as biased, regulators can discount its recommendations and the study’s credibility suffers. That’s the same logic behind strong audit discipline in quality assurance and why documentation rigor matters across trial roles like clinical research administrators.

The DMC usually operates under a charter, not just the protocol. The charter defines membership, conflict-of-interest rules, meeting cadence, what data will be reviewed, how recommendations are made, and how confidentiality is protected. If you work in regulatory-facing tracks, understanding “charter logic” complements the thinking needed for regulatory affairs specialists and clinical regulatory specialists, because both disciplines live on process, role boundaries, and evidence.

A beginner trap is assuming the DMC “runs the trial.” It doesn’t. It provides oversight and recommendations. Operational execution still sits with the sponsor, CRO, and sites—where roles like clinical trial assistants, clinical research assistants, and data teams like clinical data managers do the day-to-day work that determines whether interim data is even trustworthy.

If you’re aiming to become the person who “understands the whole trial,” DMC knowledge is a shortcut. It forces you to think like a decision-maker rather than a task executor, which is exactly how people move toward higher-responsibility, higher-paying clinical research roles and leadership pathways such as clinical research project management.

3. What the DMC Actually Reviews: Safety, Efficacy, Integrity, and “Hidden” Risks

The DMC doesn’t just scan AE tables and call it a day. A strong DMC review is layered: participant safety, emerging benefit, and whether the data is credible enough to justify continuing.

Safety review: the heartbeat of DMC oversight

The DMC looks at serious adverse events, adverse events of special interest, discontinuations, dose interruptions, lab signals, and deaths—often by arm. They pay attention to timing (early vs late), clustering (site-specific spikes), and patterns that suggest mechanism-driven toxicity rather than random noise. This is the same reasoning used in safety careers like pharmacovigilance and drug safety specialist, where the challenge is separating signal from background rates.

A DMC also cares about the denominator problem: counts mean little without exposure context. If one arm has higher discontinuation, AE counts can look artificially lower because participants stop being observed. This is where DMC thinking overlaps with data discipline roles like lead clinical data analyst and broader data integrity ecosystems like clinical data management platforms.
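The denominator problem can be made concrete with a small calculation. The sketch below is illustrative only: the arm-level numbers are invented, and the helper names are assumptions rather than anything taken from a real DMC package. It compares a crude proportion (events per participant) with an exposure-adjusted rate (events per 100 person-years).

```python
def crude_proportion(events: int, participants: int) -> float:
    """Share of participants with an event, ignoring time under observation."""
    if participants <= 0:
        raise ValueError("participants must be positive")
    return events / participants


def rate_per_100_person_years(events: int, person_years: float) -> float:
    """Exposure-adjusted event rate per 100 person-years of follow-up."""
    if person_years <= 0:
        raise ValueError("person_years must be positive")
    return 100.0 * events / person_years


# Hypothetical arms: same enrollment, but arm A lost follow-up time
# because more participants discontinued early.
arm_a = {"participants": 200, "events": 18, "person_years": 150.0}
arm_b = {"participants": 200, "events": 24, "person_years": 200.0}

for name, arm in (("A", arm_a), ("B", arm_b)):
    crude = crude_proportion(arm["events"], arm["participants"])
    rate = rate_per_100_person_years(arm["events"], arm["person_years"])
    print(f"Arm {name}: crude {crude:.1%}, rate {rate:.1f} per 100 person-years")
```

With these made-up numbers, arm A looks safer on the crude proportion (9% versus 12%), yet both arms have the same rate once exposure is taken into account. That is the pattern a DMC is trained to look for when discontinuation differs by arm.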

Efficacy review: controlled excitement, not wishful thinking

If interim efficacy is planned, the DMC reviews whether the trend is strong enough to meet boundaries and whether the estimate is stable. They look at effect size, confidence intervals, and subgroup stability. They treat early “wins” as fragile unless the data is consistent, endpoints are clean, and missingness is not differential.
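To see why an early estimate is treated as fragile, it helps to look at how wide its confidence interval is. The following is a minimal sketch using a simple Wald interval for a risk difference. The event counts are hypothetical, the interval is not adjusted for repeated interim looks, and a real interim analysis would follow the method pre-specified in the statistical analysis plan.

```python
from math import sqrt
from statistics import NormalDist


def risk_difference_ci(events_trt, n_trt, events_ctl, n_ctl, level=0.95):
    """Return (difference, lower, upper) for treatment minus control risk.

    Uses an unadjusted Wald interval; it is a rough approximation and
    behaves poorly when event counts are small.
    """
    p_trt = events_trt / n_trt
    p_ctl = events_ctl / n_ctl
    diff = p_trt - p_ctl
    se = sqrt(p_trt * (1 - p_trt) / n_trt + p_ctl * (1 - p_ctl) / n_ctl)
    z = NormalDist().inv_cdf(0.5 + level / 2)
    return diff, diff - z * se, diff + z * se


# Hypothetical data: the same observed risks (20% vs 30%) at two sample sizes.
interim = risk_difference_ci(12, 60, 18, 60)
final = risk_difference_ci(120, 600, 180, 600)

print("interim: diff %.3f, CI (%.3f, %.3f)" % interim)
print("final:   diff %.3f, CI (%.3f, %.3f)" % final)
```

The point estimate is identical in both cases, but at the smaller sample size the interval spans zero, while at the larger one it does not. An interim "win" built on the first kind of interval is exactly what a DMC is reluctant to act on.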

This is why operational integrity matters so much. If endpoints are inconsistently measured across sites, efficacy becomes unreliable. That’s why DMC discussions often trigger operational tightening—more training, better monitoring focus, improved data cleaning—touching teams like clinical research assistants and study support functions like clinical research administrators.

Integrity review: the unglamorous layer that saves trials

This is where DMCs add massive value that beginners underestimate. DMCs look for signs that the trial is becoming uninterpretable even if no dramatic safety signal exists. Examples include rising missing data, noncompliance patterns, endpoint ascertainment drift, unusual site distributions, and data lag that makes interim looks misleading.
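One of these integrity checks, missing data by site, is easy to sketch. The example below is an assumption-laden illustration: the record layout, the site labels, and the 20% flagging threshold are all hypothetical, and a real DMC package would use the definitions fixed in the charter and analysis plan.

```python
from collections import defaultdict


def missing_rate_by_site(records):
    """Compute the share of missing endpoint values per site.

    `records` is an iterable of (site_id, endpoint_value) pairs, where a
    missing endpoint is represented as None.
    """
    totals = defaultdict(int)
    missing = defaultdict(int)
    for site_id, value in records:
        totals[site_id] += 1
        if value is None:
            missing[site_id] += 1
    return {site: missing[site] / totals[site] for site in totals}


def flag_sites(rates, threshold=0.20):
    """Return the sites whose missing-data rate exceeds the threshold."""
    return sorted(site for site, rate in rates.items() if rate > threshold)


# Hypothetical records: site S02 is missing half of its endpoint values.
records = [
    ("S01", 4.2), ("S01", 3.9), ("S01", None), ("S01", 4.5), ("S01", 4.1),
    ("S02", None), ("S02", 5.0), ("S02", None), ("S02", 4.8),
    ("S03", 3.7), ("S03", 4.0), ("S03", 4.4),
]

rates = missing_rate_by_site(records)
print(rates)
print("Sites to query:", flag_sites(rates))
```

A flag like this does not prove a problem; it tells the committee where to ask questions before missingness makes the interim picture unreliable.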

In modern trials, remote and hybrid oversight tools are common, but tools don’t solve judgment problems. If you want to understand the monitoring ecosystem that feeds DMC packages, review infrastructure topics like remote clinical trial monitoring tools and the recruitment pressures created by patient recruitment companies and technology. DMC oversight is only as good as the data pipeline it receives, which ties back to the daily discipline of site and data teams.

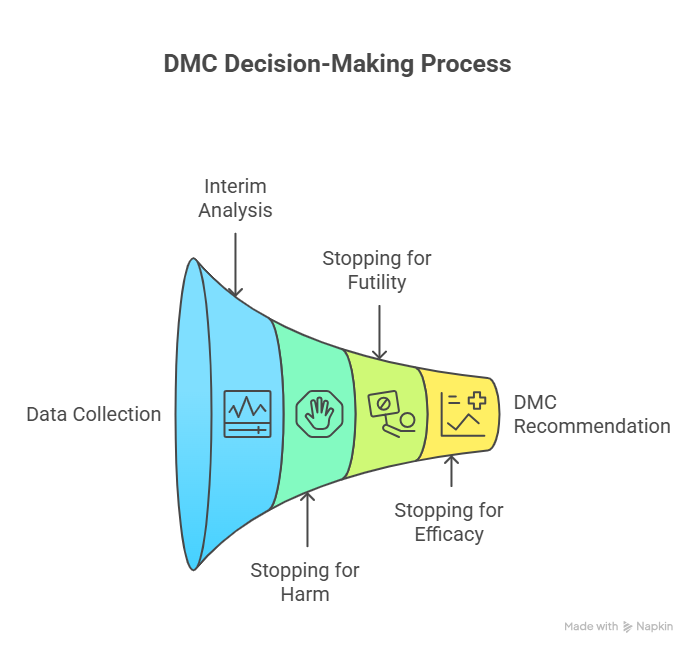

4. How DMC Decisions Work: Interim Analyses, Stopping Rules, and Recommendations

This is where most professionals misunderstand the DMC: they think it’s a group that “votes based on vibe.” A real DMC follows structured decision frameworks designed to prevent predictable human errors.

Interim analysis isn’t “peeking”—it’s controlled monitoring

Interim looks are pre-specified in the protocol or statistical analysis plan and operationalized in the DMC charter. Each interim look has rules designed to control the overall type I (false-positive) error rate. If you look at data repeatedly without proper boundaries, you increase the probability of calling a random fluctuation “significant.” That is why alpha spending and stopping boundaries exist: they split the trial’s total allowable false-positive rate across the planned looks.
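Alpha spending can be illustrated with one widely used choice, the Lan-DeMets spending function of the O'Brien-Fleming type. This is only a sketch: the article does not say which spending function any particular trial uses, the total alpha of 0.05 and the four equally spaced looks are assumptions, and turning spent alpha into actual stopping boundaries requires dedicated group-sequential software.

```python
from math import sqrt
from statistics import NormalDist

_STD_NORMAL = NormalDist()


def obf_type_alpha_spent(info_fraction: float, total_alpha: float = 0.05) -> float:
    """Cumulative alpha spent by a given information fraction (0 < t <= 1).

    Lan-DeMets O'Brien-Fleming-type spending function:
        alpha(t) = 2 - 2 * Phi(z_{1 - total_alpha / 2} / sqrt(t))
    """
    if not 0 < info_fraction <= 1:
        raise ValueError("info_fraction must be in (0, 1]")
    z = _STD_NORMAL.inv_cdf(1 - total_alpha / 2)
    return 2 - 2 * _STD_NORMAL.cdf(z / sqrt(info_fraction))


# Hypothetical schedule: looks at 25%, 50%, 75% and 100% of the information.
looks = [0.25, 0.50, 0.75, 1.00]
cumulative = [obf_type_alpha_spent(t) for t in looks]

previous = 0.0
for t, spent in zip(looks, cumulative):
    print(f"t={t:.2f}: cumulative alpha {spent:.5f}, spent at this look {spent - previous:.5f}")
    previous = spent
```

This function spends very little alpha at early looks and saves most of it for the final analysis, which is why early stopping for efficacy under this kind of rule requires a very strong signal.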

If you’re building statistical literacy as part of your career growth, the same “structured thinking” improves your performance in data-facing roles like clinical data manager and safety-facing tracks like pharmacovigilance specialist, because both require stable interpretation under uncertainty.

Stopping for harm: the cleanest decision, but still hard

Stopping for safety sounds straightforward, but it’s not always dramatic. Many signals begin as subtle increases in a class of events, or a pattern in vulnerable subgroups, or an imbalance that looks small but clinically alarming. The DMC evaluates whether the pattern is plausible, consistent, and serious enough that continuing is unethical.

This is where DMC thinking aligns with real-world safety escalation logic used in pharmacovigilance manager decision-making. You’re not only counting events; you’re assessing clinical meaning, exposure, and risk tolerance under real-world uncertainty.

Stopping for futility: the decision that saves years

Futility stopping exists because continuing a trial that cannot succeed wastes money and exposes participants to burden without benefit. DMCs may review conditional power (the probability that the final analysis will reach statistical significance, given the data observed so far and an assumed treatment effect) and trend stability. Futility decisions are painful because teams confuse “we hope it turns around” with “it can still meet its objective.” DMCs force that distinction.
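Conditional power can be sketched with the standard B-value (Brownian motion) approximation. The example is a simplification under stated assumptions: a one-sided test at 0.025, a final critical value that is not adjusted for earlier interim looks, and interim z-statistics that are invented for illustration. The function and parameter names are hypothetical.

```python
from math import sqrt
from statistics import NormalDist

_STD_NORMAL = NormalDist()


def conditional_power(z_interim, info_fraction, drift=None, alpha_one_sided=0.025):
    """Probability of crossing the final critical value given interim data.

    z_interim     : z-statistic observed at the interim look
    info_fraction : share of total statistical information accrued (0 < t < 1)
    drift         : assumed expected z-statistic at the final analysis;
                    if None, the current trend (z_interim / sqrt(t)) is used
    """
    if not 0 < info_fraction < 1:
        raise ValueError("info_fraction must be strictly between 0 and 1")
    t = info_fraction
    b_value = z_interim * sqrt(t)          # B(t) = Z(t) * sqrt(t)
    if drift is None:
        drift = b_value / t                # current-trend assumption
    z_crit = _STD_NORMAL.inv_cdf(1 - alpha_one_sided)
    # B(1) given B(t) is normal with mean B(t) + drift * (1 - t), variance 1 - t.
    expected_final = b_value + drift * (1 - t)
    return 1 - _STD_NORMAL.cdf((z_crit - expected_final) / sqrt(1 - t))


# Hypothetical interim looks at half of the planned information.
print(f"Weak trend   (z=0.5): {conditional_power(0.5, 0.5):.3f}")
print(f"Strong trend (z=2.5): {conditional_power(2.5, 0.5):.3f}")
# Same weak interim result, but assuming the originally planned effect
# (a drift of about 3.24 corresponds to 90% power at one-sided 0.025).
print(f"Weak trend, design effect: {conditional_power(0.5, 0.5, drift=3.24):.3f}")
```

Comparing the current-trend value with the value under the originally assumed effect is one way to separate "we hope it turns around" from "it can still meet its objective": the first assumes the data so far are representative, the second assumes the design hypothesis is still true despite them.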

Operationally, futility often correlates with feasibility failure: recruitment gaps, high dropout, endpoint measurement drift. Those are execution problems that roles like clinical trial assistants and clinical research administrators fight daily, and which DMC dashboards can surface early.

Stopping for overwhelming efficacy: the decision that can backfire

Early stopping for benefit can save lives, but it can also create fragile evidence. Early effects can be exaggerated, and long-term safety may be under-characterized. That’s why DMCs examine not just p-values but effect size stability, confidence intervals, and whether the endpoint is truly meaningful.

When DMCs recommend stopping early for benefit, they must ensure the decision won’t later be viewed as premature or biased. This is where quality discipline and regulator readiness—skills covered in QA specialist pathways and regulatory affairs careers—become critical to how the decision is documented and defended.

Recommendations: precise, structured, and confidentiality-safe

A DMC recommendation letter typically says: continue without change, continue with modifications, pause enrollment, or stop the trial. The letter must be actionable but cannot leak unblinded information. This is a tightrope: too vague and it’s useless; too detailed and it contaminates the trial. Professionals who master this communication style become valuable in cross-functional roles like clinical medical advisor and medical science liaison, because they learn to communicate truth under constraints.

5. DMC Charter and Operations: Meetings, Data Packages, and Communication Rules

The charter is the DMC’s operating system. If the charter is weak, DMC oversight becomes chaotic. If the charter is strong, it prevents predictable failures: biased decision-making, confused responsibilities, and data access problems that cause operational contamination.

Meeting structure: open and closed sessions

Most DMCs use open sessions to discuss operational conduct without unblinded details: recruitment, adherence, protocol deviations, data lag, and feasibility. Closed sessions are for unblinded safety and interim efficacy. This division protects the integrity of the trial by ensuring people who need operational information don’t accidentally get unblinded insight that changes behavior.

This is especially relevant in environments where remote monitoring is common and more people have access to dashboards and reports. To understand the systems that create these pressures, explore operational ecosystems like remote trial monitoring tools and data platform ecosystems like clinical data management and EDC platforms.

The data package: where trials live or die

A DMC can’t make good recommendations with late or inconsistent data. The DMC package needs consistent definitions, clear denominators, stable cut-off dates, and transparent handling of missingness. If the data package is messy, the DMC becomes cautious, and cautious oversight often translates to more scrutiny, more follow-up requests, and fewer straightforward recommendations to continue.

This is why the CRC/CTA/data trio matters even if they never speak to the DMC. Site conduct drives data reliability; data reliability drives DMC confidence; DMC confidence shapes sponsor decisions. That chain connects operational roles like clinical trial assistant and data roles like clinical data coordinator to governance-level outcomes.

Communication boundaries: protecting integrity

A DMC should not negotiate with the sponsor, and the sponsor should not pressure DMC members. Communication is usually channeled through a neutral DMC coordinator or a designated contact. The sponsor receives recommendations and implements changes through proper protocol amendments, training updates, and operational plans.

This is where regulatory and quality frameworks matter, because the documentation of decisions becomes part of the trial’s defensibility. If you want to understand the mindset that makes these systems work, study the role logic behind regulatory affairs associates and clinical regulatory specialists, and the compliance rigor enforced by quality assurance specialists.

Why DMC knowledge boosts your career value

Understanding DMC oversight turns you into someone who thinks in trial risk, evidence credibility, and decision defensibility—not just tasks. That perspective is valuable whether you’re moving into safety like pharmacovigilance, into sponsor-facing medical roles like clinical medical advisor, or into program leadership. If you’re studying and want to convert this into exam performance, pair the concepts with test-taking strategies and a stronger routine using the study environment guide.

6. FAQs

-

People often use the terms interchangeably, but what matters is scope and function. Both refer to independent oversight that reviews accumulating data to protect participants and preserve credibility. The key is how the charter defines responsibilities, data access, and recommendation pathways. If you understand charter boundaries, you’ll also understand why quality discipline matters in roles like QA specialist and why process clarity is valued in regulatory affairs. The name matters less than whether independence and confidentiality are truly protected.

-

Typically, DMC members and a small statistical support function can see unblinded data, depending on the trial’s risk and monitoring plan. The restriction exists because unblinded knowledge changes behavior—site decisions, recruitment emphasis, protocol adherence, and even reporting patterns. That creates bias that can invalidate results. This is why DMC confidentiality is treated as a core integrity safeguard, similar to how data integrity is protected by clinical data management teams and why documentation rigor is enforced in clinical research administration.

-

A sponsor can technically make its own decisions, but ignoring a credible DMC recommendation creates serious ethical and credibility risk. If the DMC recommends stopping for harm and the sponsor continues, the sponsor must justify that decision, and the trial’s integrity can be questioned. This is why trials rely on clear governance, and why compliance mindsets from quality assurance and regulator-facing roles like clinical regulatory specialists matter in how these decisions are documented.

-

Operational teams can become desensitized to slow-moving threats: rising missing data, site outliers, endpoint drift, and differential discontinuation patterns. DMCs are structured to see those patterns early and ask uncomfortable questions before the trial becomes unrecoverable. This intersects heavily with data-focused roles like clinical data coordinator and advanced tracks like lead clinical data analyst, because pattern recognition is where credibility is won or lost.

-

They use predefined interim monitoring plans, statistical boundaries, and skepticism about instability. They examine effect size, confidence intervals, missingness, and whether results are consistent across sites and time. They also look for operational causes that can fake efficacy, such as unblinding, differential follow-up, or endpoint measurement inconsistency. This is why DMC oversight is closely linked to strong monitoring ecosystems like remote monitoring tools and clean data pipelines supported by EDC platforms.

-

Safety, data, regulatory, and leadership tracks benefit heavily. If you’re moving into safety, DMC logic strengthens your signal assessment skills used in pharmacovigilance and higher responsibility roles like pharmacovigilance manager. If you’re data-focused, it improves how you prioritize critical data elements, aligning with clinical data management. If you’re regulatory-facing, it strengthens your governance instincts, aligning with regulatory affairs associate and clinical regulatory specialist paths.

-

Study DMC oversight as a decision framework: what data is reviewed, what rules guide interim decisions, how confidentiality prevents bias, and how recommendations protect participants and integrity. Then practice applying those rules to scenarios. Build your routine with proven test-taking strategies and reduce distractions using the perfect study environment guide. When you can explain DMC decisions in plain language, you’re exam-ready and job-ready.